I added a bunch of stuff, but showing it off is kind of hard right now… I still need to add damage multipliers based on difficulty level.
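Here is a rough sketch of how difficulty-based damage multipliers could work. The difficulty names, the multiplier values, and `scaled_damage` are all hypothetical, since the post doesn't define any of them. In the actual Unreal project this would live in C++ or Blueprints, not Python.

```python
from enum import Enum


class Difficulty(Enum):
    # Hypothetical difficulty levels; the post does not name them.
    EASY = "easy"
    NORMAL = "normal"
    HARD = "hard"


# Hypothetical multipliers, chosen only for illustration.
DAMAGE_MULTIPLIERS = {
    Difficulty.EASY: 0.5,
    Difficulty.NORMAL: 1.0,
    Difficulty.HARD: 1.5,
}


def scaled_damage(base_damage: float, difficulty: Difficulty) -> float:
    """Scale a hit's base damage by the multiplier for the current difficulty."""
    return base_damage * DAMAGE_MULTIPLIERS[difficulty]


print(scaled_damage(20.0, Difficulty.HARD))  # 30.0
```

Keeping the values in one lookup table means tuning a difficulty is a data change rather than a code change.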

Side Profile(r)

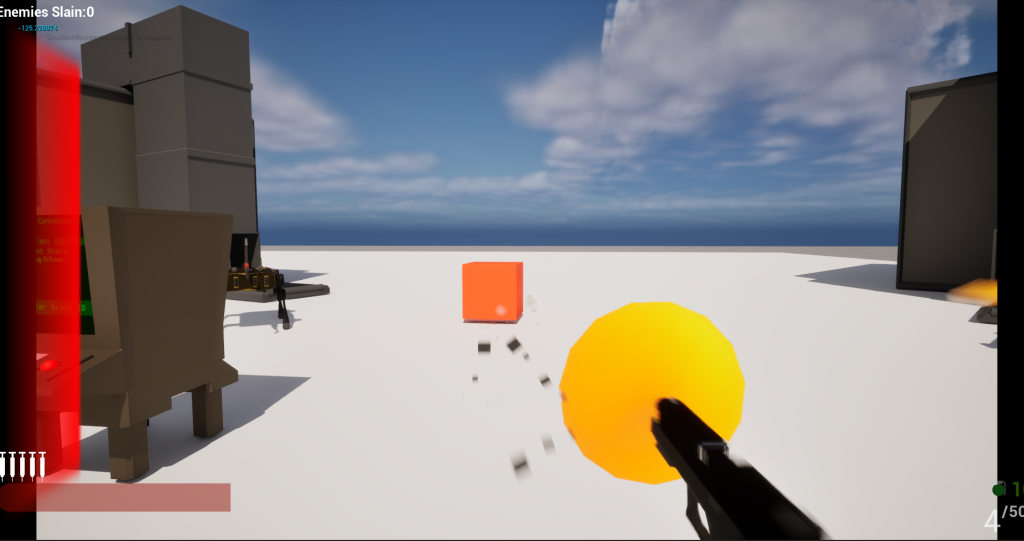

I closed the “Game Loop” by adding announcements to the HUD and creating an “OnGameModeAnnouncement” event that HUDs can bind to. The look isn’t ideal, but I’m working through it. In addition, when I switched to the “Standard” game mode (which has pretty much all of the bot types), my FPS went from about 60 […]
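The “OnGameModeAnnouncement” idea is a publish/subscribe pattern: the game mode broadcasts once, and any number of HUDs bind to receive the message. Below is a minimal Python sketch, assuming an announcement is just a string. `MulticastEvent`, `GameMode`, and `Hud` are hypothetical stand-ins, not Unreal’s API; in Unreal this role is usually filled by a multicast delegate or a Blueprint event dispatcher.

```python
from typing import Callable, List

Handler = Callable[[str], None]


class MulticastEvent:
    """Minimal stand-in for a multicast delegate: many listeners, one broadcast."""

    def __init__(self) -> None:
        self._handlers: List[Handler] = []

    def add(self, handler: Handler) -> None:
        self._handlers.append(handler)

    def remove(self, handler: Handler) -> None:
        self._handlers.remove(handler)

    def broadcast(self, message: str) -> None:
        # Iterate over a copy so a handler can unbind itself mid-broadcast.
        for handler in list(self._handlers):
            handler(message)


class GameMode:
    def __init__(self) -> None:
        # Hypothetical counterpart of the post's "OnGameModeAnnouncement" event.
        self.on_game_mode_announcement = MulticastEvent()

    def announce(self, message: str) -> None:
        self.on_game_mode_announcement.broadcast(message)


class Hud:
    def __init__(self, game_mode: GameMode) -> None:
        self.shown: List[str] = []
        # The HUD binds itself, so the game mode never tracks which HUDs exist.
        game_mode.on_game_mode_announcement.add(self.show_announcement)

    def show_announcement(self, message: str) -> None:
        # A real HUD would update a widget; here we just record the text.
        self.shown.append(message)


game_mode = GameMode()
hud = Hud(game_mode)
game_mode.announce("Example announcement")
print(hud.shown)  # ['Example announcement']
```

Because the HUD does the binding, the game mode stays unaware of how many HUDs exist or how they look, so restyling the announcements is a HUD-only change.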

Events are interrupts but in a make-believe comp-sci context

I had a few learning experiences with Unreal while working on the global game mode settings (also, I use the term “group ID” in the game but sometimes call it “team ID”, so bear with me here and assume they’re the same thing). 1 – Event dispatchers kick off before the game tick (which […]

Failing to make an Alert Light

I wanted to spend 30-ish minutes before work making the alert light I talked about in my last post. Here’s where I got: At the end there, the direction of the light is all wrong. I’m probably going to redo 100% of this later today (as it kinda looks bad anyway and a part of me wants the […]

Now they talk to each other!

It takes a bit to see, but essentially what happened there was: This works through a hierarchy of multicast delegates (https://dev.epicgames.com/documentation/en-us/unreal-engine/multicast-delegates-in-unreal-engine) that pass the player’s known location from bot -> assembler -> game mode; the game mode then alerts all of the assemblers, and from there each bot is alerted. In other news, I did a bunch […]
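Here is a minimal Python sketch of that relay. It assumes each bot belongs to exactly one assembler, that alerts fan back down through each assembler to its own bots, and that a location is a plain `(x, y, z)` tuple. The class and method names are hypothetical and only mirror the post’s bot/assembler/game mode roles; they are not the project’s code or Unreal’s delegate API. Reports travel up through one set of events, and alerts fan back down through another.

```python
from typing import Callable, List, Optional, Tuple

Location = Tuple[float, float, float]
Handler = Callable[[Location], None]


class MulticastEvent:
    """Minimal stand-in for a multicast delegate carrying a location."""

    def __init__(self) -> None:
        self._handlers: List[Handler] = []

    def add(self, handler: Handler) -> None:
        self._handlers.append(handler)

    def broadcast(self, location: Location) -> None:
        for handler in list(self._handlers):
            handler(location)


class Bot:
    def __init__(self) -> None:
        self.known_player_location: Optional[Location] = None
        self.on_player_spotted = MulticastEvent()  # up: bot -> assembler

    def spot_player(self, location: Location) -> None:
        self.known_player_location = location
        self.on_player_spotted.broadcast(location)

    def receive_alert(self, location: Location) -> None:
        # The down path only updates state and never re-broadcasts, so no loop.
        self.known_player_location = location


class Assembler:
    def __init__(self) -> None:
        self.on_player_reported = MulticastEvent()  # up: assembler -> game mode
        self.on_alert = MulticastEvent()            # down: assembler -> its bots

    def add_bot(self, bot: Bot) -> None:
        bot.on_player_spotted.add(self.on_player_reported.broadcast)
        self.on_alert.add(bot.receive_alert)


class GameMode:
    def __init__(self) -> None:
        self.on_alert = MulticastEvent()  # down: game mode -> every assembler

    def add_assembler(self, assembler: Assembler) -> None:
        assembler.on_player_reported.add(self.on_alert.broadcast)
        self.on_alert.add(assembler.on_alert.broadcast)


game_mode = GameMode()
assemblers = [Assembler(), Assembler()]
bots = [Bot() for _ in range(4)]
for i, bot in enumerate(bots):
    assemblers[i % 2].add_bot(bot)  # two bots per assembler
for assembler in assemblers:
    game_mode.add_assembler(assembler)

# One bot spots the player; every bot under every assembler learns the location.
bots[0].spot_player((100.0, 250.0, 0.0))
print([b.known_player_location for b in bots])
```

Each layer only knows about the layer directly beneath it, so adding another assembler or bot is just one more `add_*` call rather than a change to the game mode.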

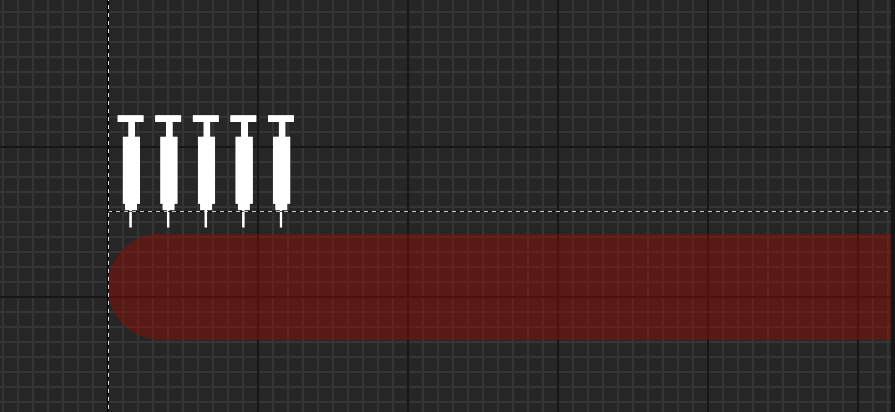

Sounds like it’s spraying but really it’s injecting

I finished up the health side. I wanted the injection to sound like a spray, but it doesn’t sound great right now. I’ll mess with that later on.

Quick Hit

I added a syrette (syringe) bar to the HUD and a quick effect for pressing “V”, which I’ll make the heal key. I think that, combined with a little “psssh” sound, will be enough to tell the player they healed.
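A minimal Python sketch of the heal-key logic, assuming one syrette restores a fixed amount of health. The starting count, the heal amount, and `use_syrette` are hypothetical; the post only establishes the syrette bar and the “V” key. Returning a bool lets the caller play the effect and the “psssh” sound only when a heal actually happened.

```python
from dataclasses import dataclass


@dataclass
class Player:
    health: float = 40.0
    max_health: float = 100.0
    syrettes: int = 3           # hypothetical count shown in the syrette bar
    heal_amount: float = 50.0   # hypothetical heal per syrette

    def use_syrette(self) -> bool:
        """Handle a press of the heal key ("V" in the post).

        Returns True only if a syrette was consumed, so the caller can
        trigger the screen effect and sound on a successful heal.
        """
        if self.syrettes <= 0 or self.health >= self.max_health:
            return False
        self.syrettes -= 1
        # Clamp so a heal never pushes health past the maximum.
        self.health = min(self.max_health, self.health + self.heal_amount)
        return True


player = Player()
player.use_syrette()
print(player.health, player.syrettes)  # 90.0 2
```

Refusing to spend a syrette at full health is a design choice made for this sketch, not something the post specifies.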

Gameplay Gameplan (From a Gamefan during a self-made gamejam)

So I was looking back at the last thing I made for planning, and I can already see the inherent problem: I never made a “Game” sequence in addition to the boss building. In that vein, here’s what I was thinking: This seems simple enough, but there are a few mechanics I do not […]

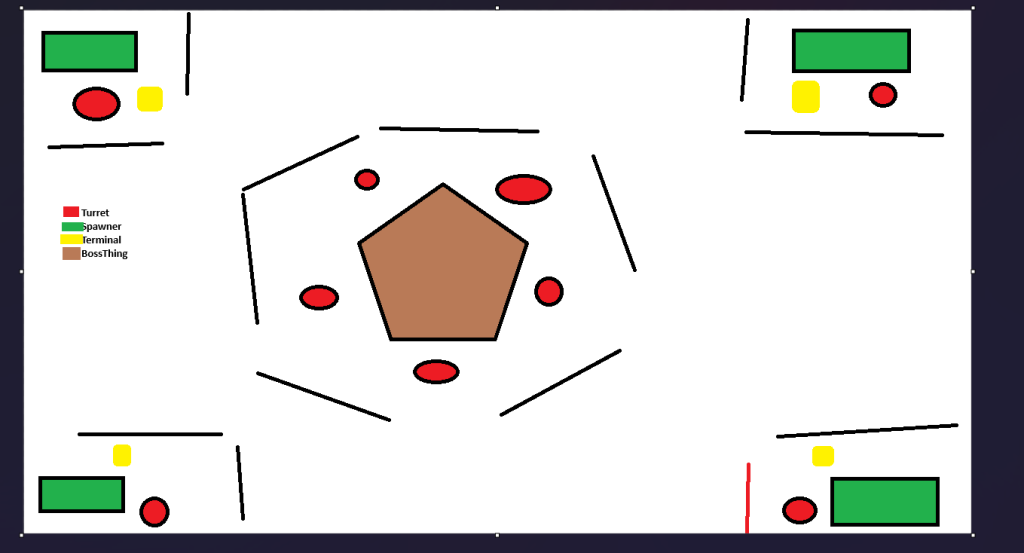

This might be too big

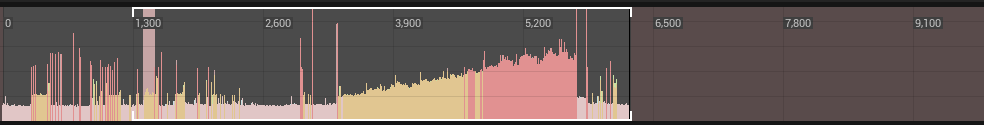

Working on the level side of things… I might need to downscale this one. I also made a very long track that I want to compress, so I seem to have a theme here…

Zap

I put the turret in the game and nearly went deaf trying to make a good laser sound. I was trying to use Electric with Operator to make a good “wirrr ZAP” sound, but I ended up just making garbage that made my head hurt (lower your speakers before playing). I also made a scorch mark decal […]