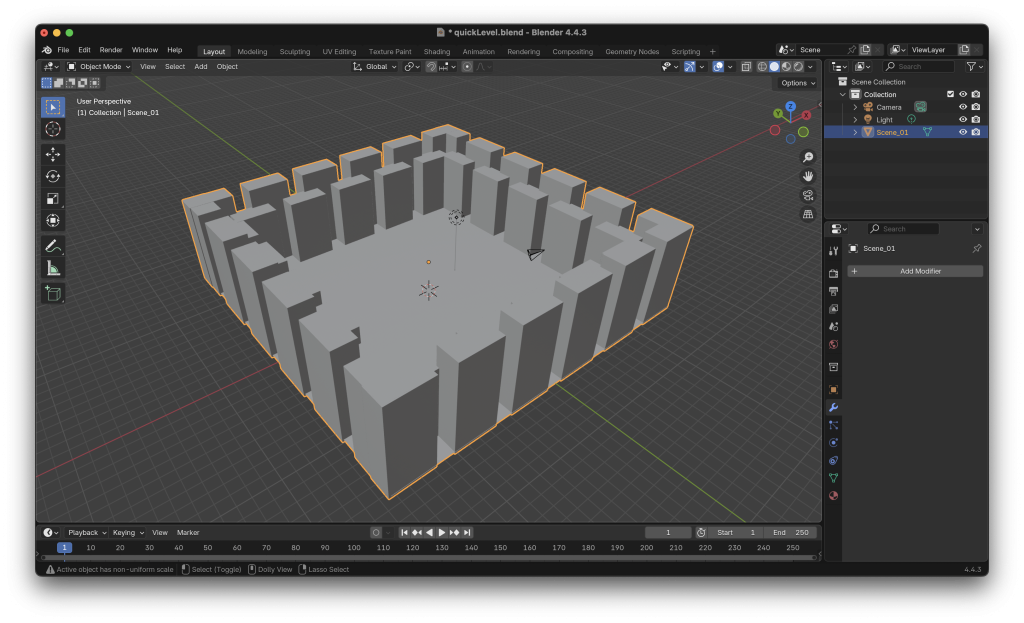

I’m constantly pondering what to do about the “boss room” for the game. Honestly, I’ve been stumped on how to make it fun. In the meantime, I’ve done a bunch of smaller things on the game, such as another map. I tried my hand at making a much, much bigger map. The thought was that […]

Drone on

Made a drone in Blender; I’m going to try to get it in game at some point. The body still looks a bit too much like a cube, so I’ll probably take another crack at adding details.

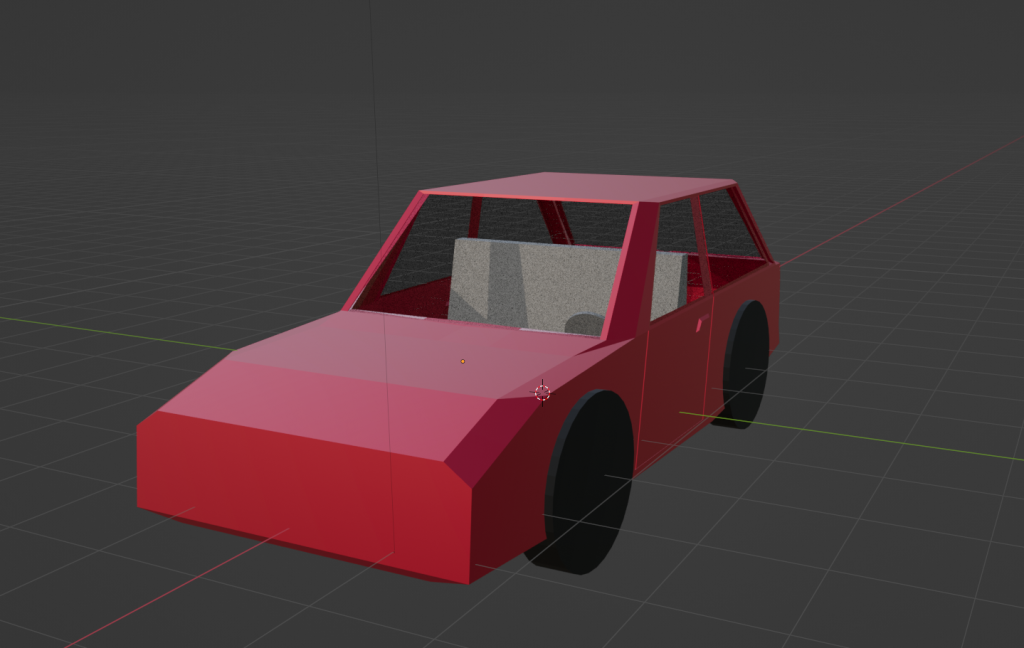

Car?

Getting the technique down to make a car. Gotta spend a few more hours on it, and I probably need to redo the topology too, because breaking things down into quads always makes editing a bit cleaner. The tires also need work so they look less like plain barrels. But really, half of the battle here is the same […]

More 3D Less render

Started messing around with Bevy (https://bevy.org), which scratches some of the lower-level itch I’m looking for but has enough utilities that I can spin things up kinda quick. In this case I just made a first-person flying camera, loaded in a scene I made in Blender, rendered it red, and added a light. […]

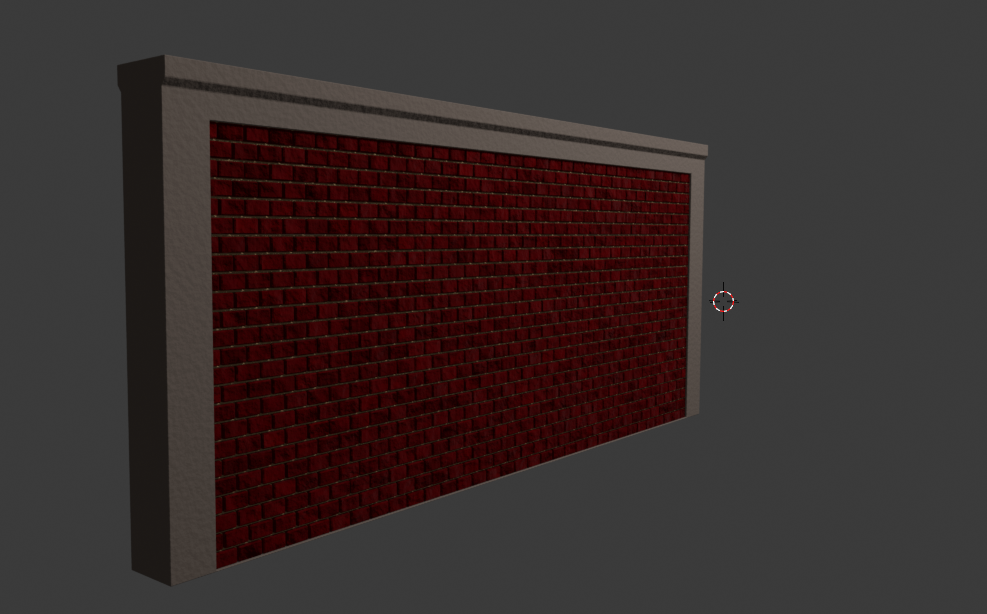

Brick by brick

Made a brick wall that’s waaayyy too detailed to put in game. I did this out of frustration after trying to make a brick wall from just a texture, which looks much, much worse. This was also my first major step into using geometry nodes in Blender (see: https://docs.blender.org/manual/en/latest/modeling/geometry_nodes/index.html), which essentially let you make parameterized geometry […]
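To show what “parameterized geometry” means for a brick wall, here is a minimal sketch in plain Rust. It is not Blender's geometry nodes API. It assumes a running-bond layout, where every other row is shifted by half a brick. The inputs (`WallParams`, `wall_width`, `rows`, `brick_width`, `brick_height`, `mortar`) are hypothetical stand-ins for the kind of values a node tree might expose.

```rust
/// One brick's placement: lower-left corner (x, y) and its (possibly trimmed) width.
#[derive(Debug, Clone, PartialEq)]
struct Brick {
    x: f32,
    y: f32,
    width: f32,
}

/// Hypothetical wall parameters; changing any of them regenerates the whole layout.
struct WallParams {
    wall_width: f32,
    rows: usize,
    brick_width: f32,
    brick_height: f32,
    mortar: f32, // gap between neighboring bricks
}

/// Lay out bricks in a running bond: odd rows are offset by half a brick so the
/// vertical joints don't line up, and bricks overhanging either edge are trimmed.
fn layout_bricks(p: &WallParams) -> Vec<Brick> {
    let mut bricks = Vec::new();
    let step = p.brick_width + p.mortar;
    for row in 0..p.rows {
        let y = row as f32 * (p.brick_height + p.mortar);
        // Odd rows start half a step to the left of the wall edge.
        let mut x = if row % 2 == 1 { -step / 2.0 } else { 0.0 };
        while x < p.wall_width {
            // Clip the brick to the wall's horizontal extent [0, wall_width].
            let start = x.max(0.0);
            let end = (x + p.brick_width).min(p.wall_width);
            if end > start {
                bricks.push(Brick { x: start, y, width: end - start });
            }
            x += step;
        }
    }
    bricks
}

fn main() {
    let params = WallParams {
        wall_width: 4.0,
        rows: 3,
        brick_width: 1.0,
        brick_height: 0.5,
        mortar: 0.05,
    };
    for b in layout_bricks(&params) {
        println!("{:?}", b);
    }
}
```

Geometry nodes would then instance a brick mesh at each placement. The point is the same as in the node tree: the wall is a function of a few numbers rather than hand-placed geometry.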

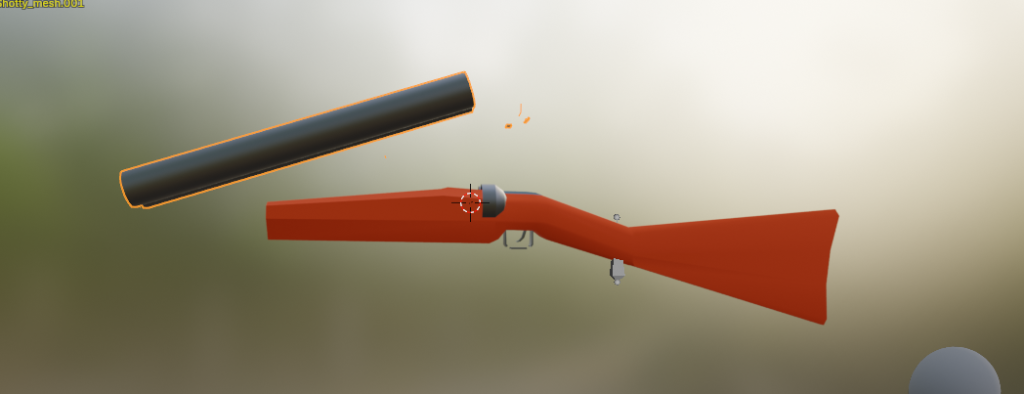

Touch-ups (also feat. boomstick)

Got the double-barreled shotgun in game. I also added some tracers to make shooting feel a bit better. On the back-end side, I reworked how damage works, so now there’s falloff for shots (note the damage numbers in the corner). This should make longer-range engagements play a bit better. I […]
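The excerpt doesn't say what falloff curve the game uses. Below is a minimal sketch of one common approach, assuming linear falloff. Shots do full damage up to a start distance, then ramp down to a minimum fraction at an end distance. The `Falloff` struct, its field names, and the numbers are hypothetical, not the game's actual values.

```rust
/// Hypothetical per-weapon falloff settings.
struct Falloff {
    base_damage: f32,
    falloff_start: f32,  // full damage at or below this distance
    falloff_end: f32,    // minimum damage at or beyond this distance
    min_multiplier: f32, // fraction of base damage kept at long range
}

impl Falloff {
    /// Damage for a hit at `distance`, linearly interpolated between the two ranges.
    fn damage_at(&self, distance: f32) -> f32 {
        if distance <= self.falloff_start {
            return self.base_damage;
        }
        if distance >= self.falloff_end {
            return self.base_damage * self.min_multiplier;
        }
        // t runs from 0 at falloff_start to 1 at falloff_end.
        let t = (distance - self.falloff_start) / (self.falloff_end - self.falloff_start);
        let multiplier = 1.0 + (self.min_multiplier - 1.0) * t;
        self.base_damage * multiplier
    }
}

fn main() {
    let shotgun = Falloff { base_damage: 100.0, falloff_start: 10.0, falloff_end: 30.0, min_multiplier: 0.25 };
    for d in [5.0_f32, 15.0, 20.0, 25.0, 40.0] {
        println!("distance {:>4}: damage {:.1}", d, shotgun.damage_at(d));
    }
}
```

A curve (e.g. ease-out) could replace the linear `t`. Linear is just the easiest to tune by reading the damage numbers on screen.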

“Time is the Enemy” (feat. boomstick)

Now that I’ve closed the gameplay loop, I realized there’s really nothing pushing the player along. If the only objective is “go destroy this thing,” the gameplay will get stale very fast. To counter this, I wanted to make some kind of timer that would force the player to go out and explore/accomplish their mission. […]
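Here is one way such a timer could be structured. This is a minimal sketch, assuming a per-frame countdown driven by delta time. The ability to earn extra time by completing objectives (`add_time`) is a hypothetical mechanic, not something the post describes, and `MissionTimer` and `TimerState` are illustrative names.

```rust
/// Whether the mission clock is still running.
#[derive(Debug, PartialEq)]
enum TimerState {
    Running,
    Expired,
}

/// Hypothetical mission timer that counts down every frame.
struct MissionTimer {
    remaining: f32, // seconds left
}

impl MissionTimer {
    fn new(seconds: f32) -> Self {
        MissionTimer { remaining: seconds }
    }

    /// Advance by one frame's delta time, clamping at zero.
    fn tick(&mut self, dt: f32) -> TimerState {
        self.remaining = (self.remaining - dt).max(0.0);
        if self.remaining == 0.0 {
            TimerState::Expired
        } else {
            TimerState::Running
        }
    }

    /// Hypothetical reward for finishing an objective; no effect once expired.
    fn add_time(&mut self, seconds: f32) {
        if self.remaining > 0.0 {
            self.remaining += seconds;
        }
    }
}

fn main() {
    let mut timer = MissionTimer::new(2.0);
    let mut frames = 0;
    // Simulate fixed half-second frames until the clock runs out.
    while timer.tick(0.5) == TimerState::Running {
        frames += 1;
    }
    println!("timer expired after {} running frames", frames);
}
```

Keeping the timer as plain data with a `tick` method makes it easy to hook into whatever the engine's frame update is. What happens on `Expired` (alarm, enemy wave, mission failure) is left open.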

Failing to make an Alert Light

Before work, I wanted to spend 30-ish minutes making the alert light I talked about in my last post. Here’s where I got: at the end there, the direction of the light is all wrong. I’m probably going to redo 100% of this later today (as it kinda looks bad anyway, and a part of me wants the […]

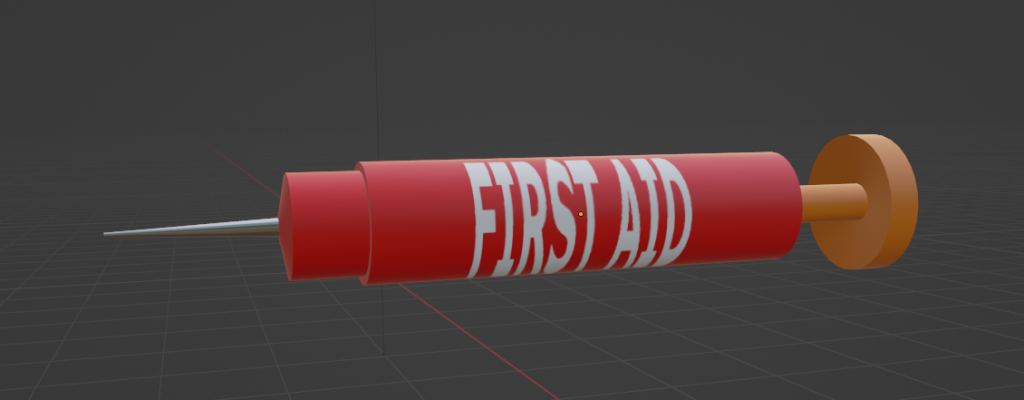

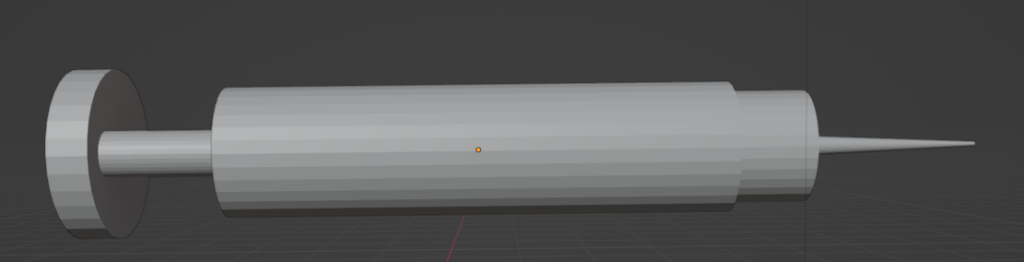

Medical Devices

Spent some time building this guy. What I’m going for is a quick box that holds and dispenses syringes. The glass on the right is supposed to be translucent (but that will be kind of hard to see until I get it into Unreal). Putting “First Aid” on it seems kind of on the nose (no cool game design […]

Gameplay Gameplan (From a Gamefan during a self-made gamejam)

So I was looking back at the last thing I made for planning, and I can already see the inherent problem: I never made a “Game” sequence in addition to the boss building. In that vein, here’s what I was thinking: This seems simple enough, but there are a few mechanics I do not […]