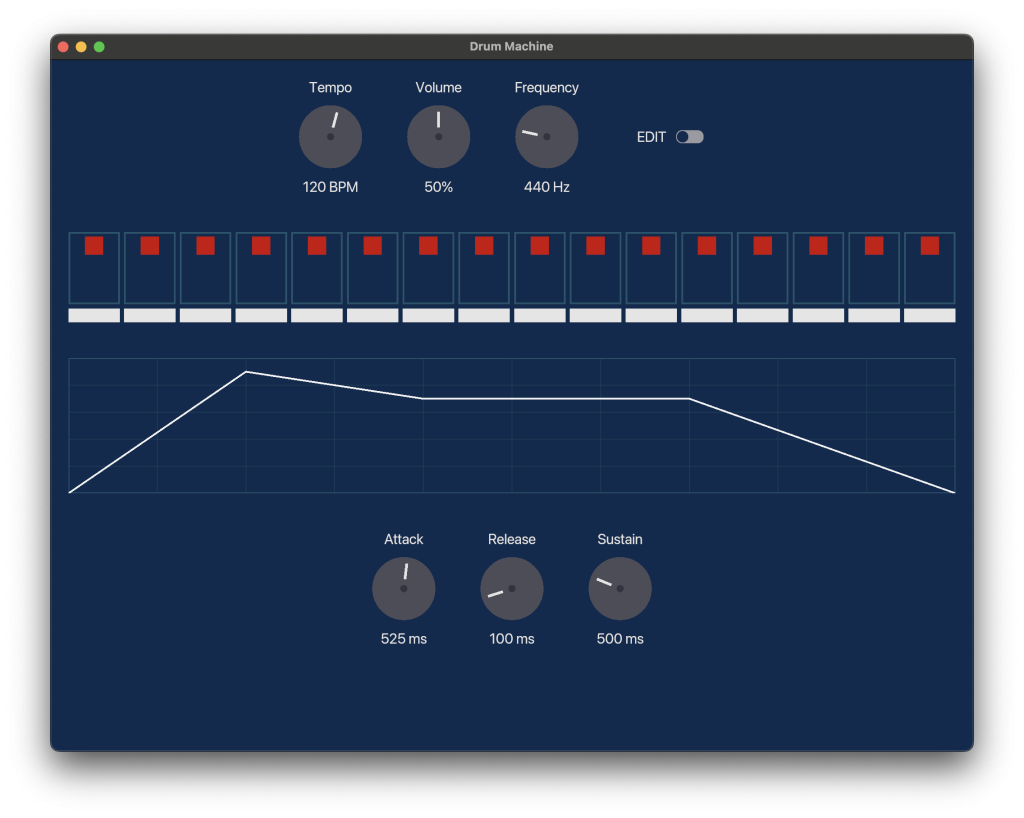

Making moves to make a simple drum machine app: It does not look good… Gotta clean that up, make it a bit more formal looking. I very much dislike the cartoony side of things. Honestly I think the audio side shouldn’t be that difficult, it’s really just a sine wave generator with a parallel array for the […]
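For a rough idea of what "sine wave generator plus a parallel array" could look like, here is a minimal Python sketch. The excerpt cuts off before saying what the parallel array holds, so everything here is an assumption: `steps` (which 16th-note steps fire), `freqs` (pitch per step), the 120 BPM tempo, the 0.1 s hit length, and the function names `sine_hit` and `render_pattern` are all made up for illustration and are not the app's actual code.

```python
import math

SAMPLE_RATE = 44100  # samples per second (assumed, not from the app)


def sine_hit(freq_hz, duration_s, sample_rate=SAMPLE_RATE):
    """One drum-like hit: a sine wave with a linear fade-out."""
    n = int(duration_s * sample_rate)
    return [
        math.sin(2 * math.pi * freq_hz * i / sample_rate) * (1 - i / n)
        for i in range(n)
    ]


def render_pattern(steps, freqs, bpm=120, sample_rate=SAMPLE_RATE):
    """Mix one bar of 16th-note steps into a list of samples.

    steps and freqs are parallel arrays (hypothetical layout):
    steps[i] is True when a hit plays on step i, and freqs[i] is
    the pitch of that hit in Hz.
    """
    step_len = int(sample_rate * 60 / bpm / 4)  # samples per 16th note
    out = [0.0] * (step_len * len(steps))
    for i, (on, freq) in enumerate(zip(steps, freqs)):
        if not on:
            continue
        start = i * step_len
        for j, sample in enumerate(sine_hit(freq, 0.1, sample_rate)):
            if start + j < len(out):  # drop anything past the end of the bar
                out[start + j] += sample
    return out


# Four-on-the-floor: a 60 Hz thump on every fourth step.
steps = [True, False, False, False] * 4
freqs = [60.0] * 16
bar = render_pattern(steps, freqs)
```

`bar` is just a list of floats between -1 and 1, so it still needs to be handed to whatever audio output the app uses.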

Loop-da loop

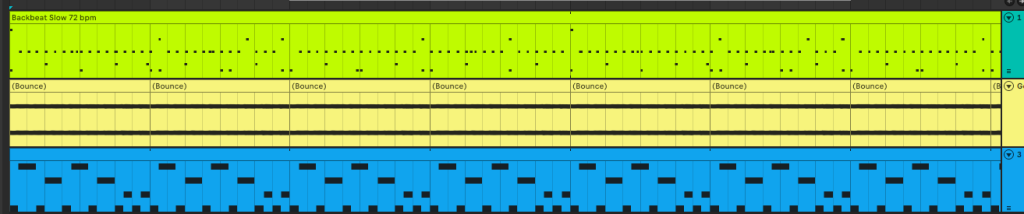

As it says in the title, I’m pretty sure this is a beat from a Cyberpunk 2077 track, but I’m not sure which one… Otherwise, I added a sidechain compressor the way you’re “supposed” to, so that whatever you want emphasized within a bar stays emphasized. Essentially: Bass drum hits -> enable compressor (i.e. […]
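The "bass drum hits -> compressor kicks in" idea can be sketched in a few lines of Python. This is a simplified illustration, not how any particular DAW's compressor is implemented: the function name `sidechain_duck`, the threshold, ratio, and release values are all assumptions picked for the example, and the envelope follower is the crudest one that works.

```python
def sidechain_duck(signal, kick, threshold=0.2, ratio=4.0, release=0.999):
    """Turn `signal` down whenever the `kick` level rises above `threshold`.

    Both inputs are lists of samples of the same length. All parameter
    values are illustrative assumptions.
    """
    env = 0.0
    out = []
    for s, k in zip(signal, kick):
        # Peak envelope follower: jump up instantly, decay slowly.
        env = max(abs(k), env * release)
        if env > threshold:
            # Squash the part of the kick level that is above the threshold,
            # then apply the resulting gain to the other track.
            target = threshold + (env - threshold) / ratio
            gain = target / env
        else:
            gain = 1.0
        out.append(s * gain)
    return out


# A constant pad gets ducked when the kick lands on the second sample,
# then slowly recovers as the envelope decays.
pad = [1.0, 1.0, 1.0, 1.0]
kick = [0.0, 1.0, 0.0, 0.0]
ducked = sidechain_duck(pad, kick)
```

The key point is that the gain is computed from the kick but applied to the other track, which is what keeps the kick on top of the mix.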

It’s hot outside so here’s a cool loop

Lou-Oop

I had WAAAY more weird glitch effects on this one but I pulled it back. The snare still sounds too loud imo.

I found a preset that lets me rip off Hotline Miami’s soundtrack

If you don’t know what I am talking about: https://soundcloud.com/devolverdigital/sets/hotline-miami-official. The synths are the little stabs in the background that come in after that drop sound thing.

Zap

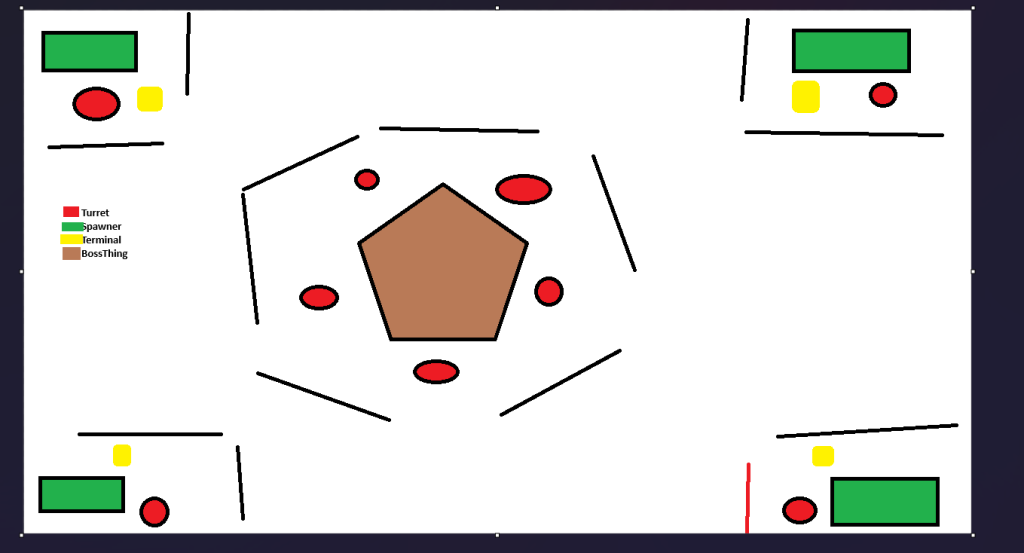

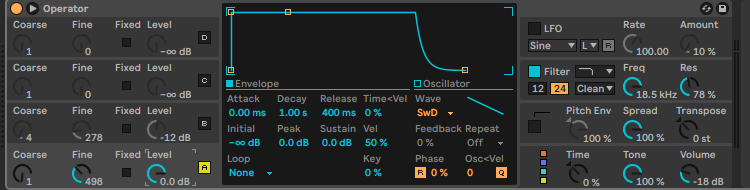

Put the turret in game and nearly went deaf trying to make a good laser sound. I was trying to use Electric with Operator to make a good “wirrr ZAP” sound, but I ended up just making garbage that made my head hurt. (Lower your speakers before playing.) I also made a scorch mark decal […]

I moved (LooppegMafia)

Flute it up

I went into this, then I found this bass sound I liked and just kinda rolled with it (it’s a bit loud, fyi). Here’s the synths (where the concert flute is a sampler). The flute is surprisingly nice; it’s from the Ableton Orchestral Woodwinds pack: https://www.ableton.com/en/packs/orchestral-woodwinds/. That […]

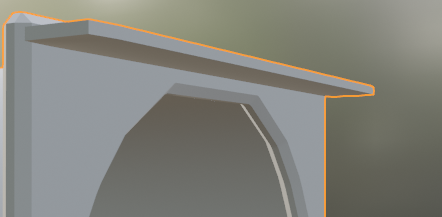

Loop-dee-Loop and Todo-s

I wanted to mesh paint (https://dev.epicgames.com/documentation/en-us/unreal-engine/getting-started-with-mesh-texture-color-painting-in-unreal-engine) a bit in Unreal and thought “Oh, I’ll just flip this virtual texture box.” I have a pretty good PC (4090, 24 GB), so I’m surprised it’s taking 5+ minutes (but then again, I’ve made NO effort to optimize any of my materials)… That being said… I guess I made […]

Touch ups

I spent the day listening to old Gorillaz YouTube videos while working. Once I hopped on Ableton I tried re-creating “Welcome to the World of the Plastic Beach” from memory: It turned out better than I thought after I compared it to the original. (You may notice the beeps from Rhinestone Eyes I somehow got […]