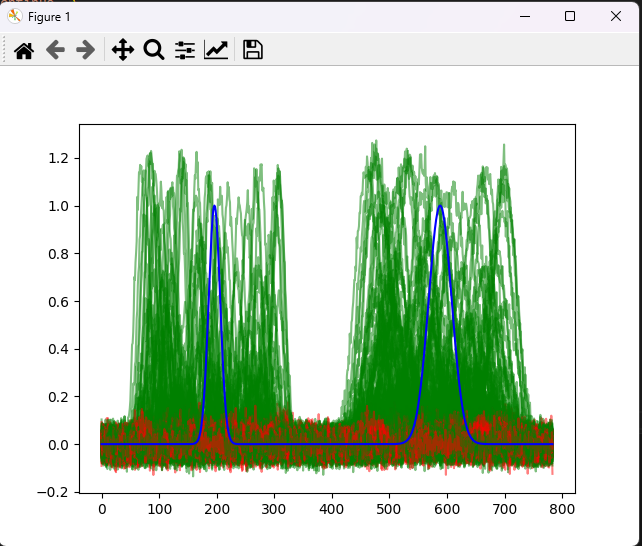

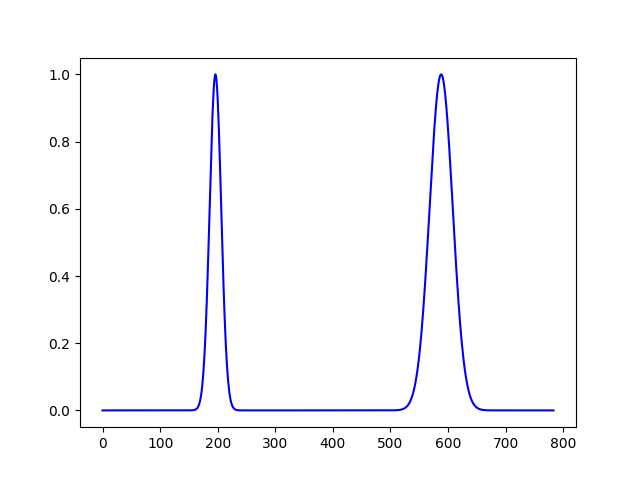

I got some feedback on my last post, which basically said: 1.) “Why didn’t you test against different signals?” 2.) “The signal I chose to match against was pretty simple; you should use a continuous function.” 3.) “Why wouldn’t you make a subfunction to detect something like a pulse (containing something like a […]
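For context, here is a minimal sketch of the kind of classic DSP baseline that feedback points toward. It assumes the "established technique" is a matched filter (cross-correlating the received signal against a known template), and it uses a Gaussian-windowed pulse as a stand-in for a smooth, continuous signal. The post's actual signals and code are not shown here, so `gaussian_pulse`, `matched_filter`, `detect`, the 0.2 noise level and the half-energy threshold are all hypothetical choices for illustration.

```python
import math
import random

def gaussian_pulse(length, width):
    """Hypothetical smooth (continuous) test pulse: a Gaussian centred in `length` samples."""
    centre = (length - 1) / 2
    return [math.exp(-((n - centre) / width) ** 2) for n in range(length)]

def matched_filter(signal, template):
    """Unnormalised cross-correlation of `signal` with `template` at every valid offset.

    For a known template in white noise, this correlation is the matched filter output.
    """
    m = len(template)
    return [
        sum(signal[i + k] * template[k] for k in range(m))
        for i in range(len(signal) - m + 1)
    ]

def detect(signal, template, threshold):
    """Return (offset, peak) of the strongest correlation if it beats `threshold`, else None."""
    scores = matched_filter(signal, template)
    best = max(range(len(scores)), key=scores.__getitem__)
    return (best, scores[best]) if scores[best] > threshold else None

random.seed(0)
template = gaussian_pulse(length=32, width=5.0)
energy = sum(t * t for t in template)  # noiseless peak value when perfectly aligned
threshold = 0.5 * energy               # assumed detection threshold: half the template energy

# Bury one copy of the pulse at sample 100 in Gaussian white noise.
signal = [random.gauss(0.0, 0.2) for _ in range(256)]
start = 100
for k, t in enumerate(template):
    signal[start + k] += t

result = detect(signal, template, threshold)
noise_only = detect([random.gauss(0.0, 0.2) for _ in range(256)], template, threshold)
print("pulse signal:", result)
print("noise only:", noise_only)
```

Because noise shifts the correlation peak slightly, the reported offset should land close to, but not necessarily exactly on, sample 100. An ML detector would need to beat this kind of baseline across different signals and noise levels before it earns its complexity.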

Using ML to make a well-known, established DSP technique harder

I decided to bite the bullet and start learning PyTorch (https://pytorch.org/) and basic neural net creation (this was also part of the reason I dropped $2000ish on a 4090 a year ago, on the promise that I could use it for things like this). After the basic letter recognition tutorial (here), I decided I wanted to make […]