I decided to bite the bullet and start learning PyTorch (https://pytorch.org/) and basic neural net creation (this was also one reason I dropped $2000ish on a 4090 a year ago, with the promise that I could use it for things like this). After the basic letter recognition tutorial (here), I decided I wanted to make a 1-D signal recognition tutorial.

Here’s the plan:

- Make a simple signal

- Make a simple neural network

- Train an AI to detect the signal in noise

- Test the neural net with data (Ideally in real time)

From this I can surmise I would need a few Python scripts: one for generating test data, one for defining the model, one for training it, and some kind of simulator to test the model out, ideally with a plot running in real time for a cool video.

Quick aside: if you see “Tensor”, it isn’t a “Tensor” (https://en.wikipedia.org/wiki/Tensor) in the math/physics sense. It seems to be more of a naming shorthand for a multi-dimensional array. To my knowledge there are no size or value checks for linearity when you convert a numpy array to a tensor in PyTorch, so I’m guessing there’s no guarantee of linearity? (Or maybe it’s because every element is a real constant that I pass the checks every time?)
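To make the aside concrete, here is a tiny sketch of the numpy-to-tensor conversion. It only uses `torch.from_numpy`; the array contents and variable names are made up for illustration. As far as I can tell the conversion just carries over shape and dtype and never inspects the values.

```python
import numpy as np
import torch

# Any numeric numpy array converts, whatever its shape or values.
arr = np.arange(6, dtype=np.float32).reshape(2, 3)
t = torch.from_numpy(arr)

# Shape and dtype carry over from numpy unchanged.
print(t.shape, t.dtype)

# from_numpy shares memory with the source array, so edits show through.
arr[0, 0] = 42.0
print(t[0, 0].item())
```

So in practice a PyTorch tensor behaves like an n-dimensional array that also knows how to live on the GPU and track gradients.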

Make a simple signal

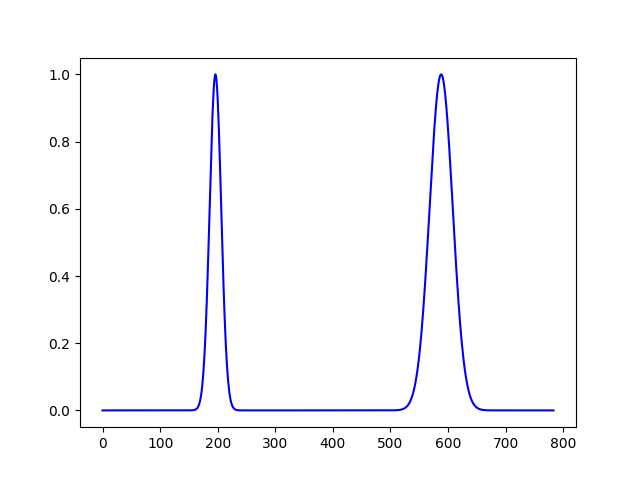

The signal I chose as my test signal was two Gaussian curves across 768 samples. I made it with numpy (which is much, much worse than MATLAB by the way; why isn’t there an rms function??? https://github.com/numpy/numpy/issues/15940).

Essentially it’s 10ish lines of code:

    import numpy as np

    def ideal_signal():
        """Return two Gaussian bumps, normalized so the peak is 1."""
        return_size = 784  # note: the text and network in this post assume 768 samples
        x = np.arange(return_size)
        # Gaussian parameters
        mu1, sigma1 = 196, 10  # center=196, std=10
        mu2, sigma2 = 588, 20  # center=588, std=20
        # Create two Gaussian curves
        gauss1 = np.exp(-0.5 * ((x - mu1) / sigma1) ** 2)
        gauss2 = np.exp(-0.5 * ((x - mu2) / sigma2) ** 2)
        # Combine them into one vector
        vector = gauss1 + gauss2
        # Normalize to a peak amplitude of 1
        return vector / vector.max()

Easy enough. Now onto harder things.

Make a simple neural network

Making the network is obviously the trickiest part here. You can easily mess up if you don’t understand the I/O of the network. Choosing the network complexity also currently seems to be some kind of hidden magic wielded by PhDs. In my case I had the following working for me:

- My input vector size will ALWAYS be 768 (no need to detect/truncate any features)

- My output vector will ALWAYS be binary (a yes or no for whether the signal is present)

So this makes my life a bit easier in selection. The input layer of the neural network has a size of 768, followed by a hidden layer of 128 and an output of 2. Why a hidden layer of 128? I have no idea; this is where I’m lacking knowledge-wise. My original thought was that the “features” I was looking for would fit into a 128-sample window, but as I’ve progressed I realized that is a poor basis for choosing a hidden layer size. My guess is that I’m doing too much (math/processing-wise), but the assumption I made up front seems to have worked for me.
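For reference, a network with those sizes can be sketched in a few lines of PyTorch. This is a minimal sketch, not the model from the repo: the ReLU between the layers and the names `INPUT_SIZE`, `HIDDEN_SIZE` and `NUM_CLASSES` are my assumptions, since only the 768, 128 and 2 sizes are stated above.

```python
import torch
from torch import nn

INPUT_SIZE = 768    # samples per signal
HIDDEN_SIZE = 128   # hidden layer width
NUM_CLASSES = 2     # [no signal, signal]

# Assumption: a ReLU between the layers; the activation is not named in the text.
model = nn.Sequential(
    nn.Linear(INPUT_SIZE, HIDDEN_SIZE),
    nn.ReLU(),
    nn.Linear(HIDDEN_SIZE, NUM_CLASSES),
)

batch = torch.zeros(4, INPUT_SIZE)   # 4 dummy signals
logits = model(batch)                # raw scores, one pair per signal

# Count only the weight matrices (biases excluded).
n_weights = sum(
    p.numel() for name, p in model.named_parameters() if name.endswith("weight")
)
print(tuple(logits.shape), n_weights)
```

The weight count is 768*128 + 128*2, which is a handy sanity check that the I/O sizes are wired the way you think they are.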

Train an AI to detect the signal in noise

This is the bulk of the work. The idea was to generate a bunch of signals that represent the span of real-world signals a detector like this would see. That leaves pretty much three parameters to mess with: signal delay (the number of samples to shift), signal SNR, and system noise floor. Technically you do not need the system noise floor, but I kept it in as a parameter to make any scripts I wrote easier to customize later. In my case I held the system noise floor constant at -30dB.

Essentially the pseudocode for generating signals is:

1.) Take the ideal signal made above

2.) Apply a sample delay (positive or negative), up to a limit so we don’t lose the two Gaussian peaks

3.) Apply noise at a random SNR within a bounded limit (in my case it was -10dB to 10dB)

4.) Label the signal as a positive when the SNR I applied is above the level where I expect a detection.
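The four steps above can be sketched with numpy roughly like this. It is a sketch under assumptions, not the repo code: `make_example`, `max_shift=100` and `detect_snr_db=0.0` are hypothetical names and values I picked for illustration, the shift uses `np.roll` (a circular shift), the signal length is set to 768 to match the text, and the separate noise-floor parameter is left out to keep it short.

```python
import numpy as np

def ideal_signal(n_samples=768):
    """Two Gaussian bumps, normalized to a peak of 1 (same shape as the signal above)."""
    x = np.arange(n_samples)
    s = np.exp(-0.5 * ((x - 196) / 10) ** 2) + np.exp(-0.5 * ((x - 588) / 20) ** 2)
    return s / s.max()

def make_example(rng, max_shift=100, snr_range_db=(-10.0, 10.0), detect_snr_db=0.0):
    """Return (noisy_signal, label, shift, snr_db) for one training example.

    max_shift and detect_snr_db are illustrative guesses, not values from the post.
    """
    clean = ideal_signal()
    # Step 2: bounded delay. np.roll wraps around, which is harmless here
    # because the signal is ~0 near both ends for shifts this small.
    shift = int(rng.integers(-max_shift, max_shift + 1))
    shifted = np.roll(clean, shift)
    # Step 3: white Gaussian noise scaled to hit the requested SNR.
    snr_db = float(rng.uniform(*snr_range_db))
    signal_power = np.mean(shifted ** 2)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    noisy = shifted + rng.normal(0.0, np.sqrt(noise_power), size=shifted.shape)
    # Step 4: label is 1 only when the SNR is at or above the detection threshold.
    label = int(snr_db >= detect_snr_db)
    return noisy.astype(np.float32), label, shift, snr_db

rng = np.random.default_rng(0)
x, y, shift, snr_db = make_example(rng)
print(x.shape, y, shift, round(snr_db, 2))
```

Note that with this labeling scheme every example contains the signal; a "negative" is just one where the SNR is too low to expect a detection.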

As for the size of the training data, again I had no idea what I was doing, so I just guessed 50000 signals to shove into the neural net. In reality this was a guess-and-check process to see whether I had under-trained or over-trained as I tested the model (I’m writing this after getting a working model, which is uncommon for most of these posts). The training quality is also arbitrary here; I just kept running numbers until things worked. Kind of lame, I admit, but I have a 4090 and training takes 15 seconds, so I have that luxury.
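For anyone who hasn't seen one, a bare-bones PyTorch training loop for this kind of two-class problem looks roughly like the sketch below. Everything specific in it is an assumption for illustration: the data is random placeholder tensors of the right shape (not real signals), and the Adam optimizer, learning rate, batch size, epoch count and ReLU activation are my picks, not settings from the repo.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Placeholder data with the right shapes: random noise, random labels.
# Real training would use shifted/noisy signals and their detection labels.
signals = torch.randn(512, 768)
labels = torch.randint(0, 2, (512,))
loader = DataLoader(TensorDataset(signals, labels), batch_size=64, shuffle=True)

device = "cuda" if torch.cuda.is_available() else "cpu"
model = nn.Sequential(nn.Linear(768, 128), nn.ReLU(), nn.Linear(128, 2)).to(device)
loss_fn = nn.CrossEntropyLoss()   # expects raw logits and integer class labels
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

epoch_losses = []
for epoch in range(3):
    total = 0.0
    for xb, yb in loader:
        xb, yb = xb.to(device), yb.to(device)
        optimizer.zero_grad()
        loss = loss_fn(model(xb), yb)
        loss.backward()
        optimizer.step()
        total += loss.item() * len(xb)   # weight by batch size for a true mean
    epoch_losses.append(total / len(signals))
    print(f"epoch {epoch}: mean loss {epoch_losses[-1]:.4f}")
```

Watching the per-epoch loss on a held-out set alongside the training loss is the usual way to turn "guess and check" into something a bit more systematic.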

Test the neural net with data (Ideally in real time)

To build the simulator I wanted, I basically took a bunch of the generation code from the training data scripts and threw it into a common functions wrapper for reuse. My end artifact was a simple PyQt (https://wiki.python.org/moin/PyQt) app with buttons for starting and stopping a simulation and sliders for sample offset and signal SNR, plus an indicator showing whether or not the neural net detected the signal. The only real difficulty was dealing with async programming (which is a difficulty with all AI). My solution was to have the main thread run the Qt GUI, then spawn a background thread to do the AI work. The main thread generates the data, plots it, and then does a thread-safe send (using pyqtSignal) to the background thread. The background thread processes the incoming data on the GPU and sends a positive or negative signal back to the main app, which changes the line color to green for detected and red for not detected.
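The main-thread/background-thread hand-off can be sketched without any GUI at all. The version below swaps PyQt signals for the standard library's `queue.Queue` and `threading.Thread`, so it is a stand-in for the pattern rather than the app's actual code; `classify` is a hypothetical placeholder for the neural net call.

```python
import queue
import threading

def classify(frame):
    """Stand-in for the neural net call: 'detect' when the frame mean is above 0.5."""
    return sum(frame) / len(frame) > 0.5

def worker(inbox, outbox):
    """Background thread: pull frames, classify them, push results back."""
    while True:
        frame = inbox.get()
        if frame is None:          # sentinel: the main thread asked us to stop
            break
        outbox.put(classify(frame))

inbox, outbox = queue.Queue(), queue.Queue()
thread = threading.Thread(target=worker, args=(inbox, outbox), daemon=True)
thread.start()

# "Main thread": generate a frame, hand it off, then read the verdict.
frames = [[0.9] * 8, [0.1] * 8, [0.6] * 8]
colors = []
for frame in frames:
    inbox.put(frame)
    detected = outbox.get(timeout=5)
    colors.append("green" if detected else "red")

inbox.put(None)            # tell the worker to exit
thread.join(timeout=5)
print(colors)
```

In a real GUI the main thread would not block waiting on the result like this loop does; it would get the verdict back through a signal or callback so the plot keeps drawing.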

Results

(Top slider is sample offset, bottom slider is SNR)

The plot above is locked to 20 fps, and all of the calls to the neural net on the GPU return well before the frame is finished drawing. It’s pretty surprising this worked out as well as it did. However, there are definitely issues with the hard coupling to the signal being centered. I honestly still do NOT think this is anywhere near what you would want for any critical systems (black-boxing a bunch of math in the middle seems like a bad idea). However, as a quick analysis tool I can see this being useful if it were packaged for engineers without DSP knowledge.

Other Notes: Issues/Things I Skipped

I did many more iterations that I didn’t write about here. I had issues with model sizing, training data types, implementing a dataset compatible with PyTorch, weird plotting artifacts, etc.

Future work I want to do in this space:

- Get better at understanding neural net sizing; I feel like I went arbitrary here, which I’m not a fan of

- Try to make the neural net more confident when there is NO signal. The neural net doesn’t return a pure binary signal; it returns a probability of no signal and a probability of signal. The detected/not detected indicator is really checking whether the “Yes” probability is greater than the “No” probability AND whether the “Yes” probability is greater than 60%. Ideally you would see values such as [0.001 0.99] when there is a signal present and [0.99 0.001] when there’s no signal. However, the “No” probability seems to hover at 40-50% constantly, and at low SNR both probabilities are around 50%, which is pretty much useless here.
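The detection rule described in that last bullet is small enough to write out. This is a sketch in plain Python: `softmax` and `is_detected` are names I made up, and I am assuming the two outputs are ordered [no, yes] (as in the example values above) and come from a softmax over the network's raw outputs.

```python
import math

def softmax(logits):
    """Convert raw network outputs into probabilities that sum to 1."""
    m = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def is_detected(probs, min_yes=0.6):
    """probs is [p_no, p_yes]; detect only when 'yes' wins AND clears min_yes."""
    p_no, p_yes = probs
    return p_yes > p_no and p_yes > min_yes

print(is_detected([0.001, 0.99]))         # confident "yes"
print(is_detected([0.45, 0.55]))          # "yes" wins but is under 60%
print(is_detected(softmax([0.0, 0.0])))   # equal raw outputs give 50/50
```

One thing this makes obvious: if the two probabilities really do sum to 1, the 60% check alone decides everything, because a "yes" above 0.6 is automatically bigger than the "no".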

Comparison to matched filtering

| | My Neural Net | Full Convolution With Matched Filter |
| --- | --- | --- |
| Multiplies | 98560 | 589824 |
| Additions | 98430 | 589056 |
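The numbers in the table can be reproduced with a few lines of arithmetic. This is my reconstruction of the counting, not something spelled out in the post: it assumes a 768 to 128 to 2 network counted as plain dot products (bias adds and activations ignored) and a matched filter evaluated as 768 output lags of a 768-tap dot product. The function names are made up.

```python
def dense_layer_ops(n_in, n_out):
    """Multiplies and additions for one fully connected layer (no bias, no activation)."""
    return n_in * n_out, (n_in - 1) * n_out

def matched_filter_ops(n):
    """N output lags, each an N-tap dot product."""
    return n * n, (n - 1) * n

layers = [(768, 128), (128, 2)]
nn_mults = sum(dense_layer_ops(i, o)[0] for i, o in layers)
nn_adds = sum(dense_layer_ops(i, o)[1] for i, o in layers)
mf_mults, mf_adds = matched_filter_ops(768)

print("neural net:    ", nn_mults, nn_adds)
print("matched filter:", mf_mults, mf_adds)
```

Counted this way the neural net comes out at roughly one sixth of the multiplies of the full matched filter.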

So on paper I guess this is “technically” less work for the PC than a perfect matched filter response (i.e. auto-correlation). However, I think the problem here is that using a neural net to do something this simple is probably much more processing intensive than just making a filter that pulls out the content you want. That being said, if you were in a high-pressure situation, needed to do simple signal detection, and just happened to have a free NPU in your system, this could work.
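For comparison, here is roughly what the matched filter side looks like in numpy. It is a sketch under assumptions: the template is the two-Gaussian signal at 768 samples, the delay is applied with `np.roll`, and `true_shift=20` and `noise_std=0.05` are arbitrary illustration values. `matched_filter_lag` is a hypothetical helper name.

```python
import numpy as np

def ideal_signal(n_samples=768):
    """Two Gaussian bumps, normalized to a peak of 1."""
    x = np.arange(n_samples)
    s = np.exp(-0.5 * ((x - 196) / 10) ** 2) + np.exp(-0.5 * ((x - 588) / 20) ** 2)
    return s / s.max()

def matched_filter_lag(received, template):
    """Cross-correlate against the template and return (best_lag, peak_value)."""
    corr = np.correlate(received, template, mode="full")
    peak_index = int(np.argmax(corr))
    lag = peak_index - (len(template) - 1)   # index len-1 corresponds to zero lag
    return lag, float(corr[peak_index])

rng = np.random.default_rng(1)
template = ideal_signal()
true_shift = 20      # illustrative delay in samples
noise_std = 0.05     # illustrative noise level
received = np.roll(template, true_shift) + rng.normal(0.0, noise_std, template.size)

lag, peak = matched_filter_lag(received, template)
print(lag, round(peak, 1))
```

A detector would then compare the peak value against a threshold, and unlike the neural net it hands you the delay estimate for free.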

The number of TOPS (see https://www.qualcomm.com/news/onq/2024/04/a-guide-to-ai-tops-and-npu-performance-metrics) my neural net needs is tiny: one inference is ≈ 98560 multiplies, or 98560/10^12 = 9.856e-8 tera-operations. TOPS is a per-second rate, so even at one inference per frame of my 20 fps plot that is only about 2e-6 TOPS, which is INCREDIBLY SMALL for most NPUs. Most likely I could operate this in any real-time configuration (even on the cheapest possible Qualcomm NPU here: https://en.wikipedia.org/wiki/Qualcomm_Hexagon, which has around 3 TOPS; for a better comparison, my 4090 runs 1300ish TOPS).
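The TOPS arithmetic is easy to play with in a few lines. This sketch counts both the multiplies and the additions from the table as operations, which is my choice rather than anything official, and it treats the hardware number as a theoretical peak. `required_tops` and `max_inference_rate` are made-up helper names.

```python
MULTIPLIES_PER_INFERENCE = 98_560   # from the comparison table
ADDITIONS_PER_INFERENCE = 98_430    # from the comparison table

def required_tops(ops_per_inference, inferences_per_second):
    """Sustained tera-operations per second needed for a given inference rate."""
    return ops_per_inference * inferences_per_second / 1e12

def max_inference_rate(tops_budget, ops_per_inference):
    """Theoretical upper bound on inferences per second for a given TOPS budget."""
    return tops_budget * 1e12 / ops_per_inference

ops = MULTIPLIES_PER_INFERENCE + ADDITIONS_PER_INFERENCE
for rate in (1, 20, 1_000_000):
    print(f"{rate:>9} inferences/s -> {required_tops(ops, rate):.3e} TOPS")

print(f"3 TOPS budget -> up to {max_inference_rate(3, ops):.3e} inferences/s")
```

Real hardware never hits its peak number, and memory traffic usually matters more than raw math at this size, so treat the result as a loose ceiling.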

Next Steps

I still want to get better at building these, so I think my next step will be geared more towards analyzing several signals, then trying to combine them and bin them into categories (pretty much like the PyTorch tutorial but more deliberate). Also get the code on GitHub… Code is on GitHub here: https://github.com/wfkolb/ml_signal_detector/tree/main