I got some feedback on my last post, which basically said:

1.) “Why didn’t you test against different signals?”

2.) “The signal you chose to match against was pretty simple; you should use a continuous function.”

3.) “Why wouldn’t you make a subfunction to detect something like a pulse (containing something like a comms symbol), then apply it to a larger context?”

Valid points…

Testing different signals

I went through and updated the signal simulator to support more than one type of signal.

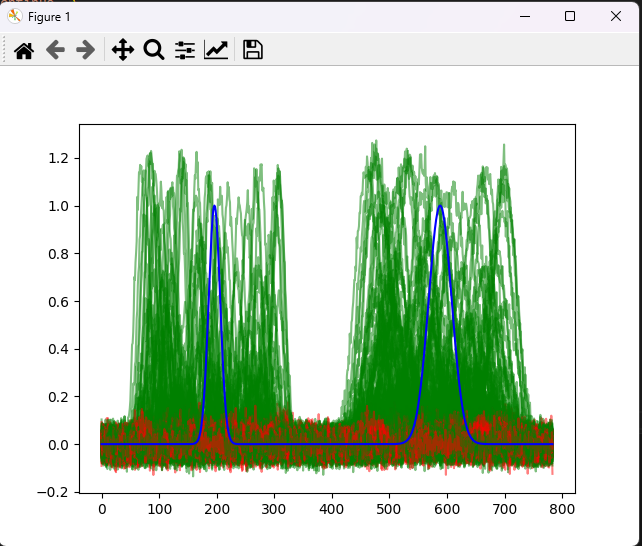

The results aren’t great:

(Again, green is detected and red is not detected.)

So this tells me that the AI might have been trained to recognize the absence of pure noise rather than the presence of the signal! To fix this I expanded training to include the signal types I made here (Gaussian, sine wave, sawtooth wave).
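For anyone following along, here's a rough sketch of what a multi-type signal simulator could look like. This is not the actual simulator code: the names `make_pulse` and `add_noise`, the window length, the pulse width, and the four-cycle sine/sawtooth are all assumptions picked for illustration. It uses only the standard library so it runs anywhere.

```python
import math
import random


def make_pulse(kind, n=128, width=32, shift=0):
    """Return n samples that are zero except for a `width`-sample pulse
    starting at index `shift`. All shapes and sizes here are assumed."""
    samples = [0.0] * n
    for i in range(width):
        t = i / width  # position inside the pulse, 0 <= t < 1
        if kind == "gaussian":
            value = math.exp(-0.5 * ((t - 0.5) / 0.15) ** 2)
        elif kind == "sine":
            value = math.sin(2 * math.pi * 4 * t)  # four cycles per pulse
        elif kind == "sawtooth":
            value = 2 * ((4 * t) % 1.0) - 1  # four ramps from -1 up to 1
        else:
            raise ValueError(f"unknown signal kind: {kind}")
        idx = shift + i
        if 0 <= idx < n:  # samples shifted past the window edge are dropped
            samples[idx] = value
    return samples


def add_noise(samples, snr_db, rng):
    """Add white Gaussian noise so that mean signal power over the whole
    window divided by noise power equals the requested SNR (in dB)."""
    signal_power = sum(s * s for s in samples) / len(samples)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [s + rng.gauss(0.0, sigma) for s in samples]
```

Usage would be something like `add_noise(make_pulse("sawtooth", shift=20), snr_db=5, rng=random.Random(0))`. Note that the SNR here is measured over the full window, zeros included, which is just one possible convention.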

I started with 20,000 signals of varying SNR for each type, and the results seem good enough:
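A minimal sketch of how a training set like that could be put together is below. Several things are assumptions, not what my code necessarily does: the SNR range of -10 to 20 dB, the uniform SNR sampling, the 64-sample window, and pairing every positive example with a noise-only negative at the same noise level. `clean_pulse` and `build_dataset` are hypothetical names.

```python
import math
import random

KINDS = ("gaussian", "sine", "sawtooth")


def clean_pulse(kind, n=64):
    """Hypothetical stand-in for the signal simulator: one noiseless pulse."""
    out = []
    for i in range(n):
        t = i / n
        if kind == "gaussian":
            out.append(math.exp(-0.5 * ((t - 0.5) / 0.15) ** 2))
        elif kind == "sine":
            out.append(math.sin(2 * math.pi * 4 * t))
        else:  # sawtooth
            out.append(2 * ((4 * t) % 1.0) - 1)
    return out


def build_dataset(per_kind, snr_range_db=(-10.0, 20.0), n=64, seed=0):
    """Return shuffled (samples, label, kind, snr_db) tuples.
    label is 1 for signal-plus-noise and 0 for noise only."""
    rng = random.Random(seed)
    examples = []
    for kind in KINDS:
        for _ in range(per_kind):
            snr_db = rng.uniform(*snr_range_db)  # assumed: uniform in dB
            clean = clean_pulse(kind, n)
            power = sum(s * s for s in clean) / n
            sigma = math.sqrt(power / (10 ** (snr_db / 10)))
            noisy = [s + rng.gauss(0.0, sigma) for s in clean]
            examples.append((noisy, 1, kind, snr_db))
            # Assumed: one noise-only negative at the same noise level.
            noise = [rng.gauss(0.0, sigma) for _ in range(n)]
            examples.append((noise, 0, "noise", snr_db))
    rng.shuffle(examples)
    return examples
```

With `build_dataset(20000)` this would give 120,000 examples in total: 20,000 positives for each of the three types plus a matching negative for each. Keeping the kind and SNR alongside each example makes it easy to break detection results down by type and noise level afterwards.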

The edge cases seem to be the weirdest part, where every signal type seems to be marked as successful. To fix this I added a limit to the shift amount that detection checks, just to see if I could get it to be seemingly perfect.
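One way to picture the shift limit: only test shifts where the whole pulse still fits inside the window, optionally with a guard margin at each end. This is a sketch under that assumption, and `valid_shifts` and its parameters are hypothetical names, not what's in my code.

```python
def valid_shifts(n_samples, pulse_width, margin=0):
    """Return the range of start offsets at which a pulse of `pulse_width`
    samples lies fully inside a window of `n_samples`, staying at least
    `margin` samples away from both edges."""
    first = margin
    last = n_samples - pulse_width - margin
    if last < first:
        return range(0)  # the pulse cannot fit with this margin
    return range(first, last + 1)
```

For example, a 4-sample pulse in a 10-sample window can start at offsets 0 through 6, and a margin of 2 narrows that to 2 through 4. This avoids the truncated pulses at the edges, but it hides the edge behavior rather than explaining it.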

I think the issue is directly related to #2: I’m not using a continuous function for detection, so I’m essentially making the two “non-signals” look a lot like two Gaussian functions, and the neural net detects them as positive.

Continuous functions

To enable this I need to rewrite a bunch of the code. I also need to rework how I’m identifying an “ideal” signal. I’ll jump back on this next week and show off the results. My hope is to feed time data straight into the neural net and have it work out the frequency content as needed.
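One possible shape for "feeding time data" from a continuous signal is to cut the sample stream into fixed-size, overlapping windows and hand each window to the net. This is only a sketch of that idea, not the planned rewrite: `sliding_windows`, the window size, and the hop are all hypothetical.

```python
def sliding_windows(stream, size, hop):
    """Yield (start_index, window) pairs covering `stream` with windows of
    `size` samples, advancing `hop` samples each step. A trailing partial
    window is skipped."""
    if size <= 0 or hop <= 0:
        raise ValueError("size and hop must be positive")
    for start in range(0, len(stream) - size + 1, hop):
        yield start, stream[start:start + size]
```

A hop smaller than the window size makes the windows overlap, so a pulse that straddles one window boundary still lands fully inside a neighboring window.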

More to figure out next week.