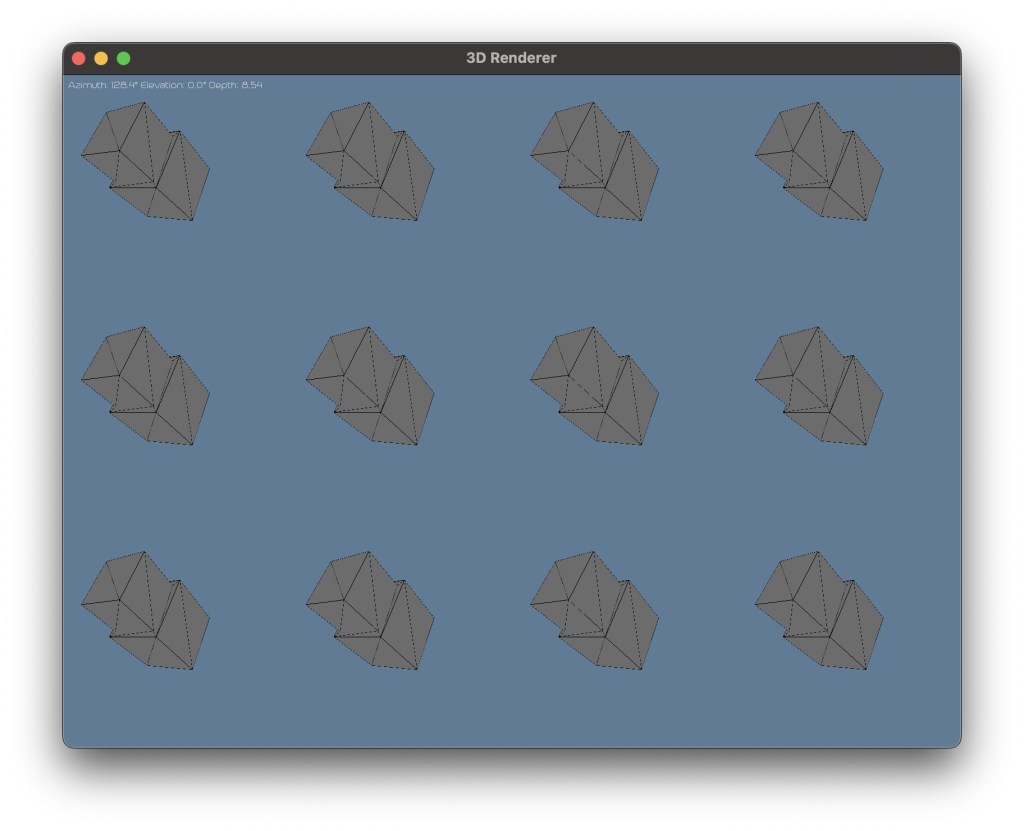

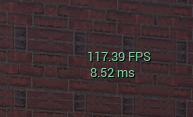

Fixed up the renderer to: 1.) use a perspective view instead of an isometric one (see https://www.bbc.co.uk/bitesize/guides/z6jkw6f/revision/4), and 2.) spawn several renderers with + and –, each with a random delay on its rendering frames, so you can see the renderers lagging behind my mouse movements. Will I do more with this? […]
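
For anyone who hasn't run into the difference before, here's a rough sketch of the two projections. This is not the renderer's actual code: the `Vec3` type, the `focal` length and the 30° isometric axes are my own assumptions for illustration. The key difference is that perspective divides x and y by depth, so far-away things shrink toward the center, while isometric uses a fixed rotation with no depth divide, so size never changes with distance.

```rust
/// A point in camera space: x right, y up, z forward (distance from the camera).
#[derive(Clone, Copy)]
struct Vec3 {
    x: f64,
    y: f64,
    z: f64,
}

/// Perspective projection: divide by depth so distant points move toward the center.
/// `focal` is an assumed focal length (distance from the camera to the image plane).
fn project_perspective(p: Vec3, focal: f64) -> Option<(f64, f64)> {
    if p.z <= 0.0 {
        return None; // on or behind the camera plane: nothing to draw
    }
    Some((focal * p.x / p.z, focal * p.y / p.z))
}

/// Isometric projection: the x and z axes are drawn 30 degrees below horizontal
/// (120 degrees apart from the vertical y axis), and depth is simply dropped.
fn project_isometric(p: Vec3) -> (f64, f64) {
    let angle = std::f64::consts::FRAC_PI_6; // 30 degrees
    let sx = (p.x - p.z) * angle.cos();
    let sy = p.y - (p.x + p.z) * angle.sin();
    (sx, sy)
}

fn main() {
    let near = Vec3 { x: 1.0, y: 1.0, z: 2.0 };
    let far = Vec3 { x: 1.0, y: 1.0, z: 4.0 };
    // Perspective: the far point lands half as far from the screen center.
    println!("perspective: {:?} vs {:?}", project_perspective(near, 1.0), project_perspective(far, 1.0));
    // Isometric: moving along z only slides the point; nothing shrinks with distance.
    println!("isometric:   {:?} vs {:?}", project_isometric(near), project_isometric(far));
}
```

The depth divide in `project_perspective` is the whole trick; everything else (camera rotation, field of view) just feeds into it.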

Getting better at telling AI what to do

I’ve been messing around with Claude Code to see how fast I can spin up silly small projects. I also wanted to do a bit more in Rust (https://rust-lang.org). The first one is a Breakout clone using the egui library (they call libraries “crates” in Rust to avoid sounding like Python, and because the package […]

New website

I’ve been going crazy with AI coding tools over the past week. Mostly I’ve been using GitHub Copilot at work, but I got a Claude Code subscription for personal use. My first project was to make a theme for this site; the old 2011 theme was getting kinda stale. I did put a bunch of work […]

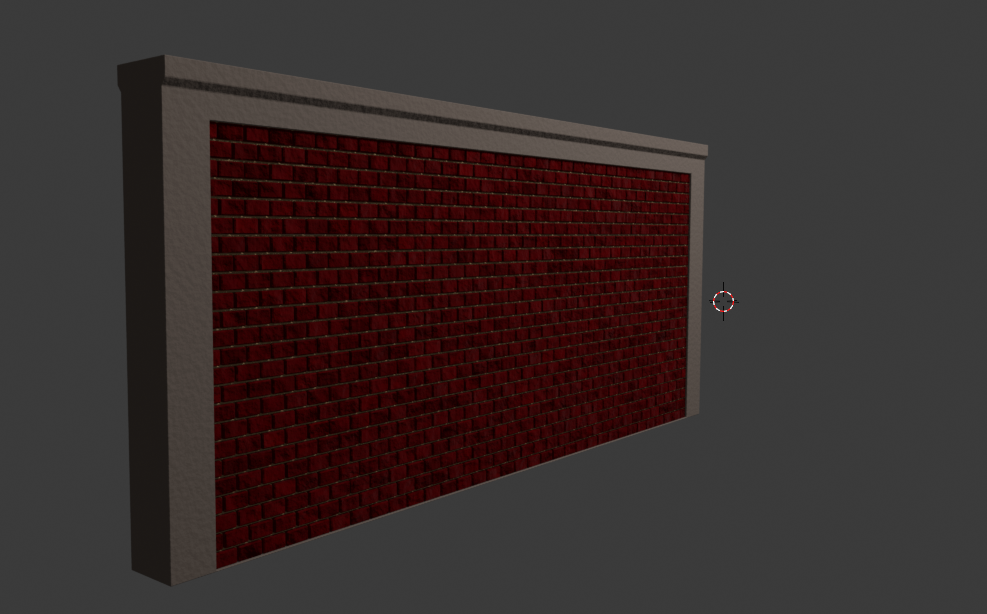

Brick by brick

Made a brick wall that’s waaayyy too detailed to put in game. I did this out of frustration after trying to make a brick wall from just a texture, which looks much, much worse. This was also my first major step into using geometry nodes in Blender (see: https://docs.blender.org/manual/en/latest/modeling/geometry_nodes/index.html), which essentially let you make parameterized geometry […]
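
Geometry nodes are a visual node graph, but the idea of "parameterized geometry" can be sketched in plain code: a handful of parameters generate every brick, so changing one number rebuilds the whole wall. This is a hypothetical illustration, not a translation of my Blender node tree; `WallParams`, its field names and the running-bond layout (every other row shifted by half a brick) are assumptions.

```rust
/// Hypothetical parameters for a procedural wall (names are illustrative only).
struct WallParams {
    rows: usize,
    bricks_per_row: usize,
    brick_w: f64,
    brick_h: f64,
    mortar: f64, // gap between neighbouring bricks
}

/// Returns the (x, y) of the lower-left corner of every brick in a running-bond layout:
/// odd rows are shifted by half a brick so the vertical joints don't line up.
fn brick_positions(p: &WallParams) -> Vec<(f64, f64)> {
    let step_x = p.brick_w + p.mortar;
    let step_y = p.brick_h + p.mortar;
    let mut out = Vec::with_capacity(p.rows * p.bricks_per_row);
    for row in 0..p.rows {
        let offset = if row % 2 == 1 { step_x / 2.0 } else { 0.0 };
        for col in 0..p.bricks_per_row {
            out.push((col as f64 * step_x + offset, row as f64 * step_y));
        }
    }
    out
}

fn main() {
    // Arbitrary example sizes; tweak any of these and the whole wall regenerates.
    let params = WallParams { rows: 3, bricks_per_row: 4, brick_w: 0.2, brick_h: 0.065, mortar: 0.01 };
    let bricks = brick_positions(&params);
    println!("{} bricks, first brick of row 1 at {:?}", bricks.len(), bricks[4]);
}
```

In a node setup the equivalent would be generating points and instancing a brick mesh on each one; a real wall would also need the half bricks at the ends of the offset rows trimmed.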

Rain Wilson

I added in rain and some mood lighting. There were also a bunch of other smaller changes, but this is where I’m at. It’s very dark and the rain kinda looks like pills falling, so I’ll probably need to do another pass here. I’m happy with the water material I set up here: Essentially it’s just […]

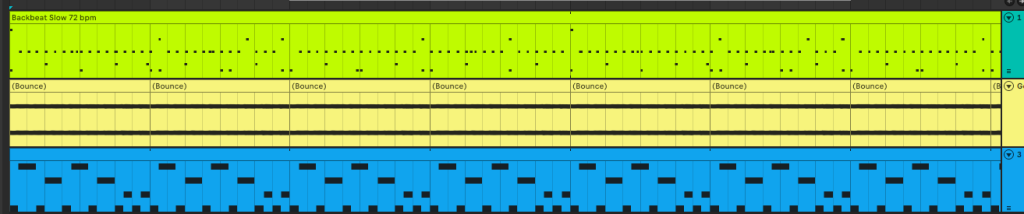

Loop-da loop

As it says in the title, I’m pretty sure this is a beat from a Cyberpunk 2077 track, but I’m not sure which one… Otherwise, I added a sidechain compressor the way you’re “supposed” to, so that whatever should stand out within a bar stays emphasized. Essentially: Bass drum hits -> enable compressor (i.e. […]
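
Here's a minimal sketch of what a sidechain compressor does under the hood, assuming the kick is the sidechain ("key") input and some other track (say, the bass) is the one being ducked. This is not the plugin I used; the struct, the one-pole envelope follower and the threshold/ratio/attack/release values are all assumptions for illustration.

```rust
/// Minimal sidechain compressor sketch: the kick (`key`) drives gain reduction
/// that is applied to a different signal (`input`), e.g. a bass track.
struct SidechainCompressor {
    threshold_db: f64,
    ratio: f64,
    attack_coeff: f64,
    release_coeff: f64,
    env: f64, // smoothed level of the key signal (linear)
}

impl SidechainCompressor {
    fn new(threshold_db: f64, ratio: f64, attack_ms: f64, release_ms: f64, sample_rate: f64) -> Self {
        // One-pole smoothing coefficient: closer to 1.0 means a slower response.
        let coeff = |ms: f64| (-1.0 / (ms * 0.001 * sample_rate)).exp();
        Self { threshold_db, ratio, attack_coeff: coeff(attack_ms), release_coeff: coeff(release_ms), env: 0.0 }
    }

    /// Process one sample: `key` is the kick, `input` is the track being ducked.
    fn process(&mut self, key: f64, input: f64) -> f64 {
        let level = key.abs();
        // Envelope follower: rise with the attack time, fall with the release time.
        let c = if level > self.env { self.attack_coeff } else { self.release_coeff };
        self.env = c * self.env + (1.0 - c) * level;

        let env_db = 20.0 * self.env.max(1e-9).log10();
        let over = env_db - self.threshold_db;
        // Above the threshold the output may only rise 1/ratio dB per dB, so cut the rest.
        let gain_db = if over > 0.0 { -over * (1.0 - 1.0 / self.ratio) } else { 0.0 };
        input * 10f64.powf(gain_db / 20.0)
    }
}

fn main() {
    let sr = 48_000.0;
    let mut comp = SidechainCompressor::new(-20.0, 4.0, 1.0, 100.0, sr);
    // A 10 ms kick "hit" followed by silence; the bass is a constant 0.5 to make the ducking obvious.
    for n in 0..(sr as usize) {
        let kick = if n < 480 { 1.0 } else { 0.0 };
        let out = comp.process(kick, 0.5);
        if n % 4800 == 0 {
            println!("t = {:>4} ms, bass gain = {:.3}", n / 48, out / 0.5);
        }
    }
}
```

The release time is what gives the "pumping" feel: the bass dips when the kick lands and then swells back up before the next hit.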

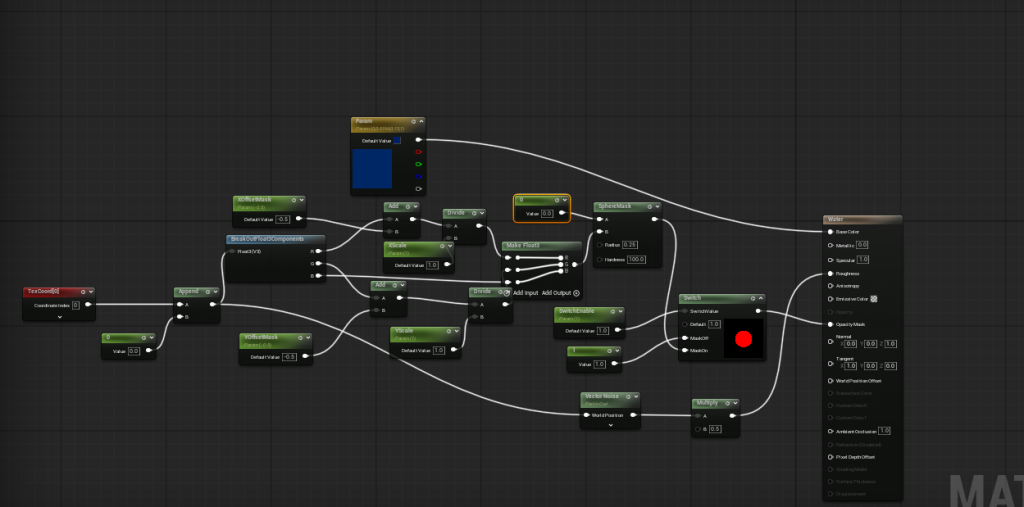

How (Not) to use materials in Unreal

The Borderlands 4 issues with Unreal (see: https://www.pcgamer.com/games/fps/borderlands-4s-latest-patch-triggers-a-flurry-of-performance-complaints-but-gearbox-says-new-stuttering-problems-should-resolve-over-time-as-the-shaders-continue-to-compile/ ) made me realize I was doing all of my materials wrong. Originally, the way I pulled in 3D meshes generated a material for each slot in the mesh. So I thought I was being clever by using material slots to determine colors (so I didn’t have […]

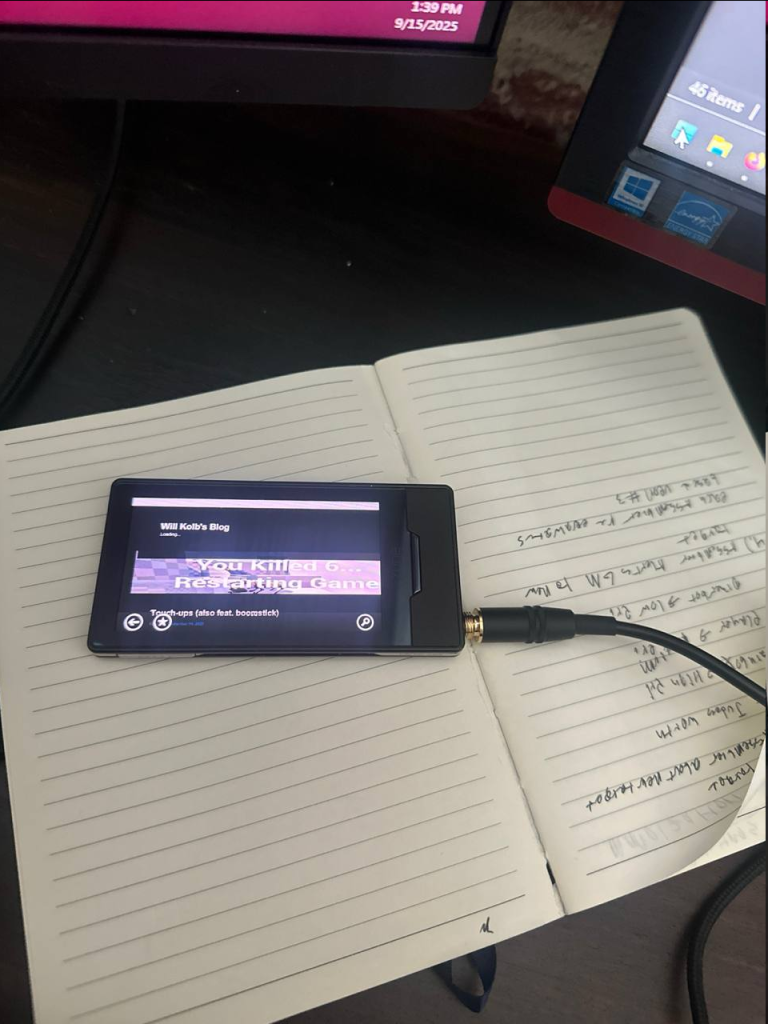

WillKolb.com: ZuneHD Compatible

I found my old Zune HD and I’ve been messing around with it. I connected it to the internet and surprisingly: The text doesn’t scale properly, which makes sense, because why on earth would any front end support 480×272? Makes me wonder if there’s a low-res “reader mode” plugin/redirect I could get running. Also the Zune […]

Touch-ups (also feat. boomstick)

Got the double-barreled shotgun in game. I also added in some tracers to make shooting feel a bit better. On the back-end side, I reworked how damage works, so now shots have damage falloff (note the damage numbers in the corner). This should make longer-range engagements play a bit better. I […]
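
The post doesn't say what falloff curve the game uses, so here's a sketch of one common approach, assumed for illustration: full damage up to a near distance, a linear ramp down to a floor at a far distance, and flat damage beyond that. `Falloff` and all of its numbers are hypothetical.

```rust
/// Hypothetical falloff settings for one weapon (distances in metres).
struct Falloff {
    base_damage: f64,
    full_damage_range: f64, // no falloff up to this distance
    min_damage_range: f64,  // damage stops dropping past this distance
    min_damage_scale: f64,  // fraction of base damage left at long range
}

impl Falloff {
    fn damage_at(&self, distance: f64) -> f64 {
        // t is 0.0 at or inside full_damage_range and 1.0 at or beyond min_damage_range.
        let t = ((distance - self.full_damage_range)
            / (self.min_damage_range - self.full_damage_range))
            .clamp(0.0, 1.0);
        // Linear interpolation from full damage (scale 1.0) down to the floor.
        let scale = 1.0 + (self.min_damage_scale - 1.0) * t;
        self.base_damage * scale
    }
}

fn main() {
    // Made-up numbers for a single shotgun pellet.
    let pellet = Falloff { base_damage: 10.0, full_damage_range: 5.0, min_damage_range: 25.0, min_damage_scale: 0.2 };
    for d in [2.0, 5.0, 15.0, 25.0, 40.0] {
        println!("{:>4} m -> {:.1} damage", d, pellet.damage_at(d));
    }
}
```

Keeping a damage floor (instead of falling all the way to zero) means long shots still register, just weakly; for a shotgun the short ranges matter most anyway.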

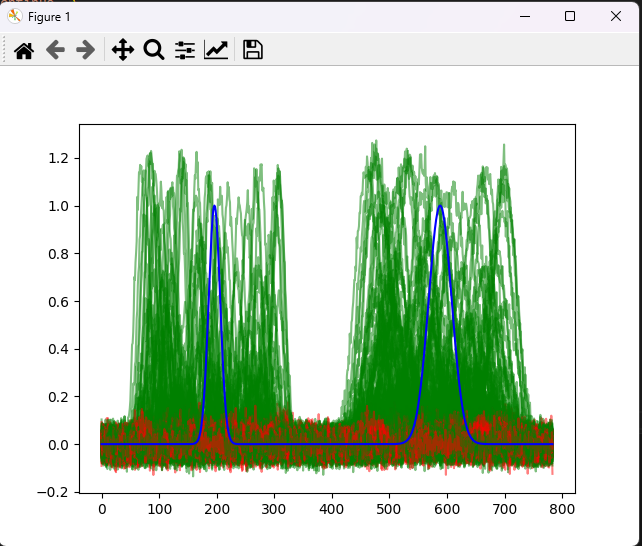

Using ML to make a well-known and established DSP technique harder (Part 2)

I got some feedback on my last post, which basically said: 1.) “Why didn’t you test against different signals?” 2.) “The signal you chose to match against was pretty simple; you should use a continuous function.” 3.) Why wouldn’t you make a subfunction to detect something like a pulse (containing something like a […]
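
This excerpt doesn't spell out which DSP technique the series is about. Assuming it's template matching (a matched filter), the classical non-ML baseline is a normalized cross-correlation: slide the template along the signal and score each position. `best_match` below is a hypothetical helper written for illustration, not code from the post.

```rust
/// Slide `template` across `signal` and return (best_offset, score), where score is the
/// normalized cross-correlation in [-1, 1]; 1.0 means a perfect (possibly scaled) match.
fn best_match(signal: &[f64], template: &[f64]) -> Option<(usize, f64)> {
    if template.is_empty() || template.len() > signal.len() {
        return None;
    }
    let t_energy: f64 = template.iter().map(|x| x * x).sum();
    let mut best: Option<(usize, f64)> = None;
    for offset in 0..=signal.len() - template.len() {
        let window = &signal[offset..offset + template.len()];
        let dot: f64 = window.iter().zip(template).map(|(a, b)| a * b).sum();
        let w_energy: f64 = window.iter().map(|x| x * x).sum();
        let denom = (t_energy * w_energy).sqrt();
        // An all-zero window has no shape to compare, so score it as 0.
        let score = if denom > 0.0 { dot / denom } else { 0.0 };
        if best.map_or(true, |(_, s)| score > s) {
            best = Some((offset, score));
        }
    }
    best
}

fn main() {
    // A small triangular pulse hidden in a slowly varying (continuous) background.
    let template = [1.0, 2.0, 3.0, 2.0, 1.0];
    let mut signal: Vec<f64> = (0..64).map(|n| 0.05 * (n as f64 * 0.2).sin()).collect();
    for (s, t) in signal[40..45].iter_mut().zip(template.iter()) {
        *s += 2.0 * t;
    }
    println!("{:?}", best_match(&signal, &template));
}
```

Because the score is normalized, a scaled copy of the pulse still scores close to 1.0. The flip side is that a flat template would match any smooth stretch of a continuous background almost perfectly, so the template needs some actual shape.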