This one is all lame presets and out-of-key stabs. I need to add art to these on SoundCloud so I feel like there’s enough effort put in. I was gonna do a generic album cover and came up with this: Where I did NONE of that using reference images or 3D model tools […]

“If the robot makes three beeps don’t stand in front of it.”

Threw the muzzle flash 3D model into the explosion so we get some kinda fire out of it. It works much better than I expected. Also attempted to make some light bulbs that hang from the ceiling, but it turns out the Unreal-provided cable component (https://dev.epicgames.com/documentation/en-us/unreal-engine/cable-components-in-unreal-engine) doesn’t apply physics forces to whatever it’s attached to. […]

The 5th rocket post

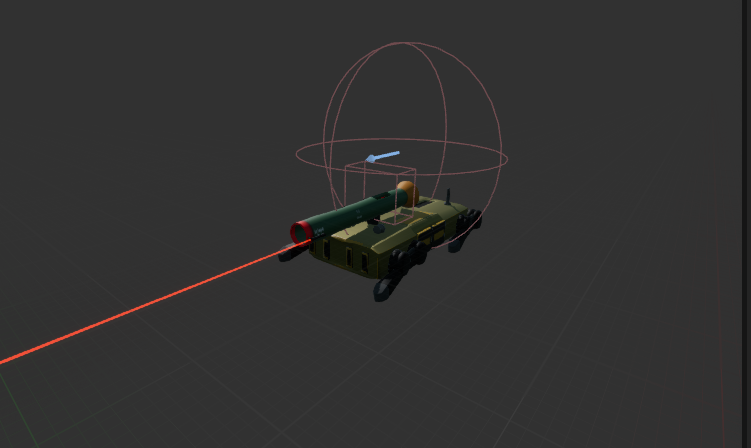

Added particles and sounds for rockets, and also added a laser pointer to the rocket bot. I made the rocket sound myself by doing a lame attempt to copy the image here (idk the context, I straight up just googled “Rocket Sound Spectrum”). I did this by making some white noise generators in Ableton and filtering […]
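For anyone who wants to poke at the noise-plus-filter idea outside a DAW, here is a rough sketch in Rust. It is not what Ableton does internally. It assumes a plain one-pole low-pass filter (the actual filter settings are cut off above), and the names `Lcg`, `next_sample` and `low_pass` plus the 500 Hz cutoff are made up for illustration.

```rust
use std::f32::consts::TAU;

/// Tiny linear congruential generator so the sketch needs no external crates.
/// (Hypothetical helper, not from the original post.)
struct Lcg(u32);

impl Lcg {
    /// Next white-noise sample in [-1.0, 1.0).
    fn next_sample(&mut self) -> f32 {
        self.0 = self.0.wrapping_mul(1_664_525).wrapping_add(1_013_904_223);
        // Keep the top 24 bits so the value converts to f32 exactly.
        let unit = (self.0 >> 8) as f32 / (1u32 << 24) as f32; // [0, 1)
        unit * 2.0 - 1.0
    }
}

/// One-pole low-pass: y[n] = y[n-1] + a * (x[n] - y[n-1]).
/// The cutoff is an assumed parameter, not a value from the post.
fn low_pass(input: &[f32], cutoff_hz: f32, sample_rate: f32) -> Vec<f32> {
    let a = 1.0 - (-TAU * cutoff_hz / sample_rate).exp();
    let mut y = 0.0f32;
    input
        .iter()
        .map(|&x| {
            y += a * (x - y);
            y
        })
        .collect()
}

fn main() {
    let mut rng = Lcg(12345);
    // 100 ms of white noise at 48 kHz.
    let noise: Vec<f32> = (0..4800).map(|_| rng.next_sample()).collect();
    // Dull it down to a low rumble.
    let rumble = low_pass(&noise, 500.0, 48_000.0);
    let energy = |s: &[f32]| s.iter().map(|v| v * v).sum::<f32>();
    println!("noise energy: {:.1}", energy(&noise));
    println!("filtered energy: {:.1}", energy(&rumble));
}
```

Stacking a few of these with different cutoffs and gains is one way to rough out a target spectrum by ear.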

Sound-y Sound and Bot Deployments

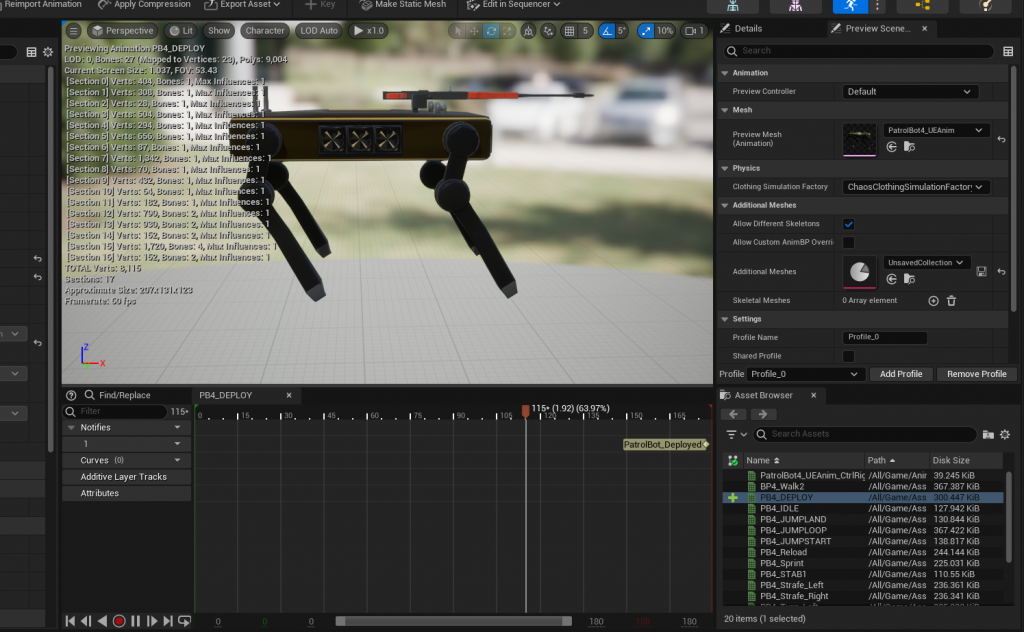

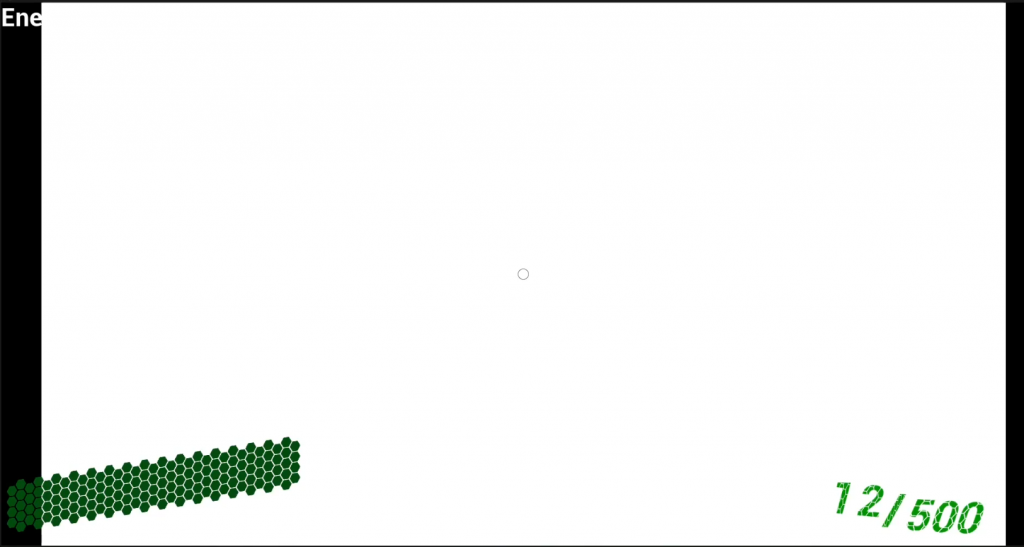

I swear the snare isn’t as prominent on my PC… Added in AI perception logic (also the HUD is still crap and rockets fly through walls…). Right now I have the detection radius at like 1 m, so that’s why I have to rub against the robot to get it standing up. This required some mods […]

Flashy Stuff feat. Loop

Flashbangs are working. I have the patrol bots hooked up to throw them based on an enumeration (which I don’t like, I’d rather use a subclass, but I shot myself in the foot earlier on). I just hijacked the reload animation and basically said “if you’re a grenade bot, reload, and instead of throwing a mag […]

Loop-gardium Loop-iosa

Wanted to make a 200-ish BPM beat but I got bored and glitched them the hell out. You should NOT do this for any reason; normally it makes a horrid clippy sound, but in this case I compressed and filtered it so it wasn’t that bad. The synth-y part is an Ableton Meld preset with an […]

Tree part 3

Recording myself modeling another tree. Then I realized I probably shouldn’t be posting videos with copyrighted music, so I made a song to go along with the tree recording (I used some of the pre-canned Ableton clips for bass and vocals, which feels like cheating, but I wanted something kinda alright and fast, the drums […]

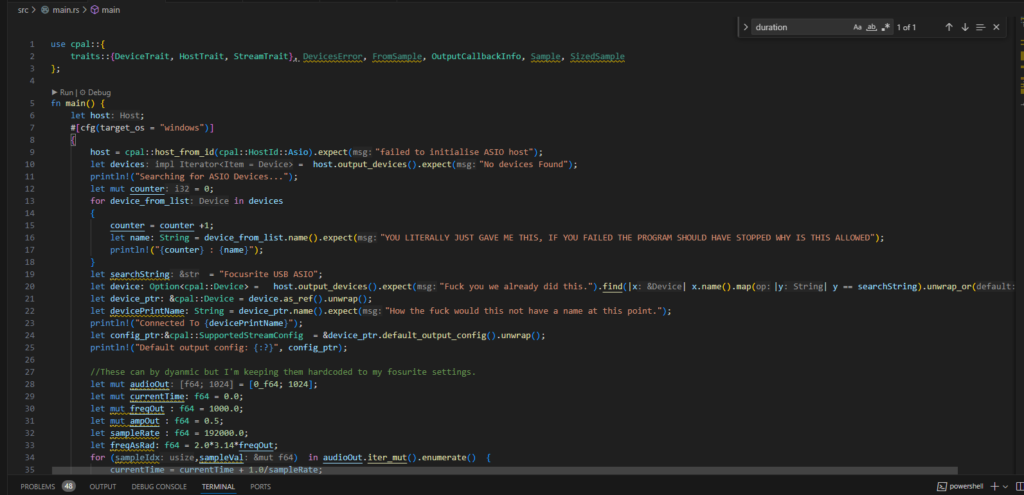

RUST

My friend is working on some stuff in Rust and it’s popped up in my job a few times, so I wrote an ASIO sine wave generator in Rust to familiarize myself. FYI the code below is not debugged/cleaned up and definitely has stuff that can be taken out. Anyone who knows Rust probably won’t […]
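The post's own code is cut off in this excerpt, so what follows is not it. This is just a minimal sketch of the sample-generation half of a sine generator, with no ASIO or audio-device code at all. The function name `fill_sine` and the 440 Hz / 48 kHz values are assumptions picked for illustration; in a real setup something like this would be called from the audio driver's buffer callback.

```rust
use std::f32::consts::TAU;

/// Fill `buffer` with a sine wave and return the phase to carry into the
/// next buffer, so consecutive buffers join without a click.
/// (Hypothetical helper, not the code from the original post.)
fn fill_sine(buffer: &mut [f32], freq_hz: f32, sample_rate: f32, mut phase: f32) -> f32 {
    // Phase advance per sample, in radians.
    let step = TAU * freq_hz / sample_rate;
    for sample in buffer.iter_mut() {
        *sample = phase.sin();
        // Wrap the phase so it never grows large and loses precision.
        phase = (phase + step) % TAU;
    }
    phase
}

fn main() {
    // Two back-to-back 10 ms buffers at 48 kHz, as a driver callback might request.
    let mut first = [0.0f32; 480];
    let mut second = [0.0f32; 480];
    let phase = fill_sine(&mut first, 440.0, 48_000.0, 0.0);
    let phase = fill_sine(&mut second, 440.0, 48_000.0, phase);
    let peak = first
        .iter()
        .chain(second.iter())
        .fold(0.0f32, |m, s| m.max(s.abs()));
    println!("peak amplitude: {peak:.3}, phase after two buffers: {phase:.3}");
}
```

Carrying the phase between calls is the main design point: restarting at zero on every buffer would put a discontinuity at each buffer boundary.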

Maybe I should have compressed this a bit more

It’s cold and I’m unhappy, so you can join me in this cacophony of GENERATIVE AUDIO! (Please turn your speakers down, this is the loudest thing I’ve put up and I don’t wanna re-upload.) “How did you achieve this musically inclined symphony??” I’m glad you asked: 1.) Operator 2.) A Clipping and distorted […]

This time with less computer

I swear only the 808 was from Ableton. Green track is the Kaoss Pad (https://korgusshop.com/products/nts-3-programmable-effect-kit?_pos=3&_sid=9d3d6f0c3&_ss=r), yellow is my bass, brown is from the PO-20 (https://teenage.engineering/store/po-20?srsltid=AfmBOopbNWwmoK9D7lDfaQzFf8onkUlwYCFjm5SLCwQ32HzNQitBiizg, gift from my brother), and peach, tan and pink are all from the Hohner Instructor 32 (https://hohner.de/en/instruments/melodica/student-melodicas/student-32-melodica I can only find the student version, I guess I got a […]