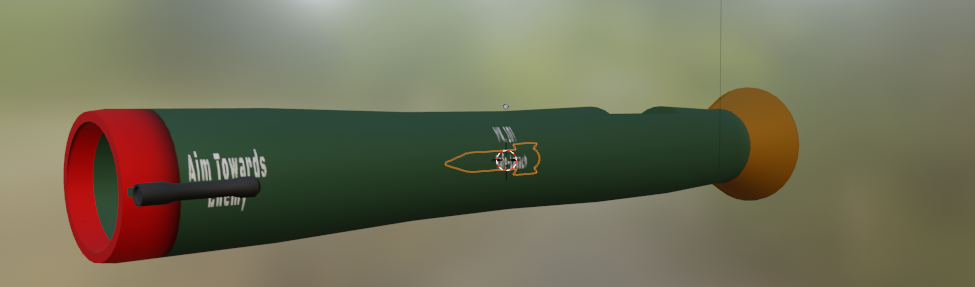

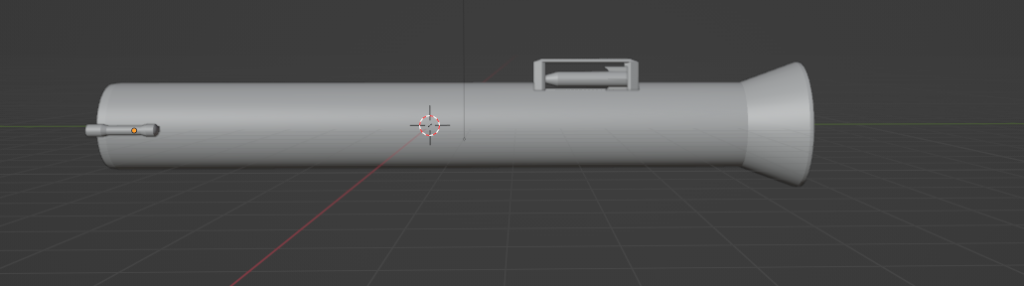

Gameplay isn’t done yet, but I got some paint set up on the rocket launcher and the rocket. Threw a bunch of random phrases + numbers on it; it still looks pretty cartoon-y (but so does everything atm). Next step is to get the firing + reload sequence set up.

Misc.

Added a parameter to scale the grenade explosion radius so you kinda see where you’ll get hit. It’s set up via user parameters and HLSL, which I haven’t used in a while (I was fluent in the XNA days, but that’s pushing 10+ years ago now). I might dig into this more just to get my […]

UE Control rigs and Custom Numpad setup

I kept looking at the last video I posted and got really annoyed at how bad the patrol bot’s animations looked. This drove me to remake the animation rig for the patrol bot (to be much, much simpler), and I started making a control rig in Unreal to push the animations there (see […]

Some Texture Painting / Raspberry Pi fun

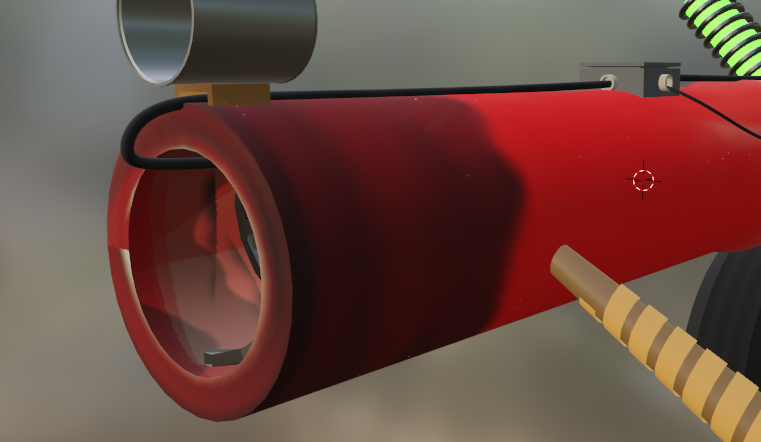

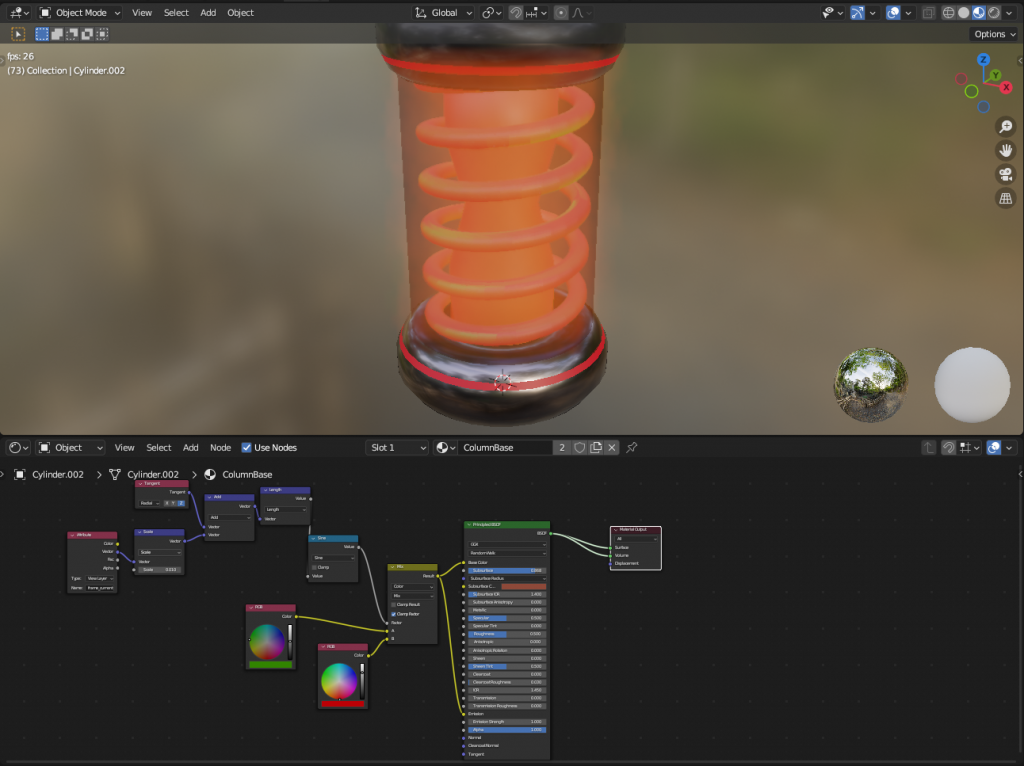

Attempted to add some color to the zapper I showed earlier. I’m not 100% enthusiastic about the job I did, but I still like some of the ideas I have here. For the record, this is how you set up a shader in Blender for Texture Paint: Essentially you make a shader that you like as […]

Zapperz

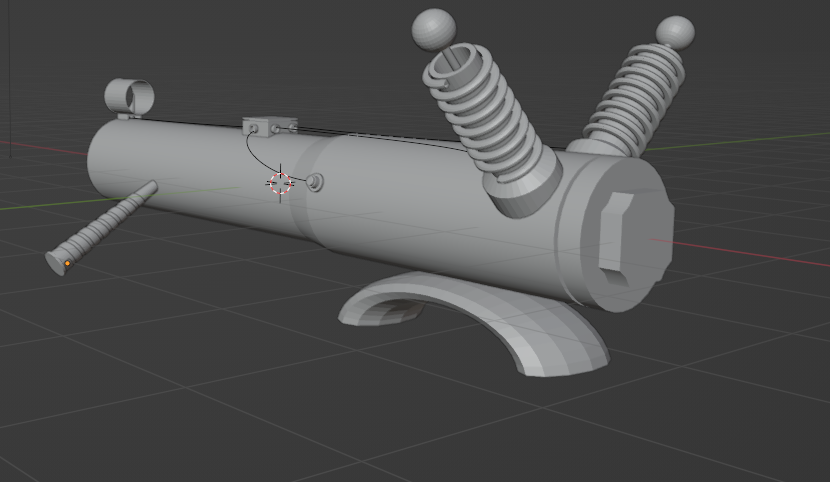

Not sure what this is, but I think I might keep rolling and texture it to see where it can fit into future projects. Other angles: The pole out the side is supposed to be the end of a baseball bat; the curve underneath I wanted to be some kind of road tire. The sights […]

….What is it?

I made this thing: I don’t know what it is but it’s neat. The column + coil is built around taking the animation frame counter, doing some math to make a sine wave, and using that as a blend factor between a few colors. Here’s a better zoom. Not much more to show of this, the base is […]
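
A rough sketch of that frame counter → sine wave → color blend idea, written in Python rather than as the actual material. Everything here is made up for illustration: the function names, the 60-frame period and the palette are assumptions, not what the real setup uses.

```python
import math


def sine_blend_factor(frame, period_frames=60):
    """Turn a frame counter into a blend factor that oscillates in [0, 1].

    period_frames is a hypothetical value: frames per full sine cycle.
    """
    phase = 2.0 * math.pi * frame / period_frames
    # sin() is in [-1, 1]; shift and scale it into [0, 1].
    return 0.5 * (math.sin(phase) + 1.0)


def lerp_color(a, b, t):
    """Linear blend between two RGB tuples; t=0 gives a, t=1 gives b."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def blend_palette(palette, t):
    """Blend across a list of colors; t=0 is the first color, t=1 the last."""
    if len(palette) == 1:
        return palette[0]
    scaled = t * (len(palette) - 1)
    # Clamp the index so t=1 still has a "next" color to blend toward.
    index = min(int(scaled), len(palette) - 2)
    return lerp_color(palette[index], palette[index + 1], scaled - index)


# Hypothetical palette: blue -> purple -> orange.
PALETTE = [(0.0, 0.2, 1.0), (0.6, 0.0, 0.8), (1.0, 0.5, 0.0)]

if __name__ == "__main__":
    for frame in range(0, 61, 15):
        t = sine_blend_factor(frame)
        print(frame, round(t, 3), blend_palette(PALETTE, t))
```

In a shader or material graph the same thing would be a time or frame input feeding a sine node and a couple of lerps; the Python version is only meant to show the math.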

Tree part 3

Recorded myself modeling another tree. Then I realized I probably shouldn’t be posting videos with copyrighted music, so I made a song to go along with the tree recording (I used some of the pre-canned Ableton clips for bass and vocals, which feels like cheating, but I wanted something kinda alright and fast, the drums […]

More Tree For Thee

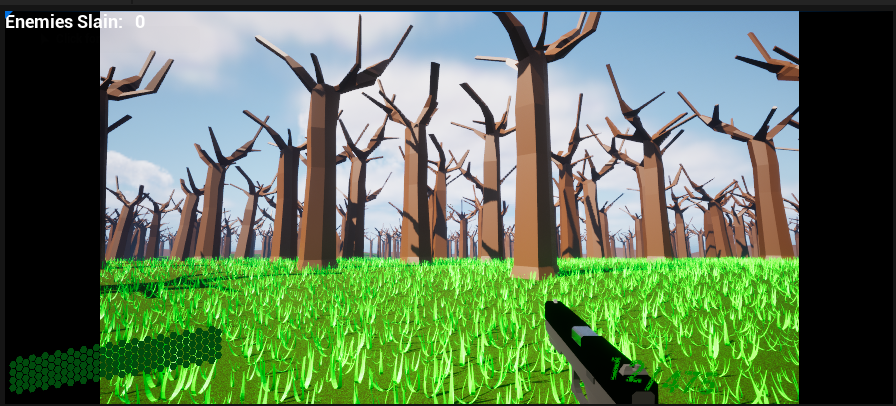

I made a better tree. It’s not perfect (it still has the weird branch in the upper left) but it’s more passable than the other tree I made. I also found the “Foliage” system in Unreal (see https://dev.epicgames.com/documentation/en-us/unreal-engine/procedural-foliage-tool-in-unreal-engine), which is the opposite of the “Landscape” system used for grass. Essentially it lets you spawn […]

THE WOODS!

Jumped back into Unreal, but I’m heavily procrastinating on the game parts, so I started messing with some of the environment tools in Unreal. Specifically I’ve been poking at the grass setup tools (https://dev.epicgames.com/documentation/en-us/unreal-engine/grass-quick-start-in-unreal-engine). Not too complicated: essentially, your environment drives a few inputs and outputs in your landscape material. So I whipped up a quick […]

Maybe I should have compressed this a bit more

It’s cold and I’m unhappy, so you can join me in this cacophony of GENERATIVE AUDIO! (Please turn your speakers down, this is the loudest thing I’ve put up and I don’t wanna re-upload.) “How did you achieve this musically inclined symphony??” I’m glad you asked: 1.) Operator 2.) A Clipping and distorted […]